LLM 0.26: Large Language Models Get Terminal Tooling

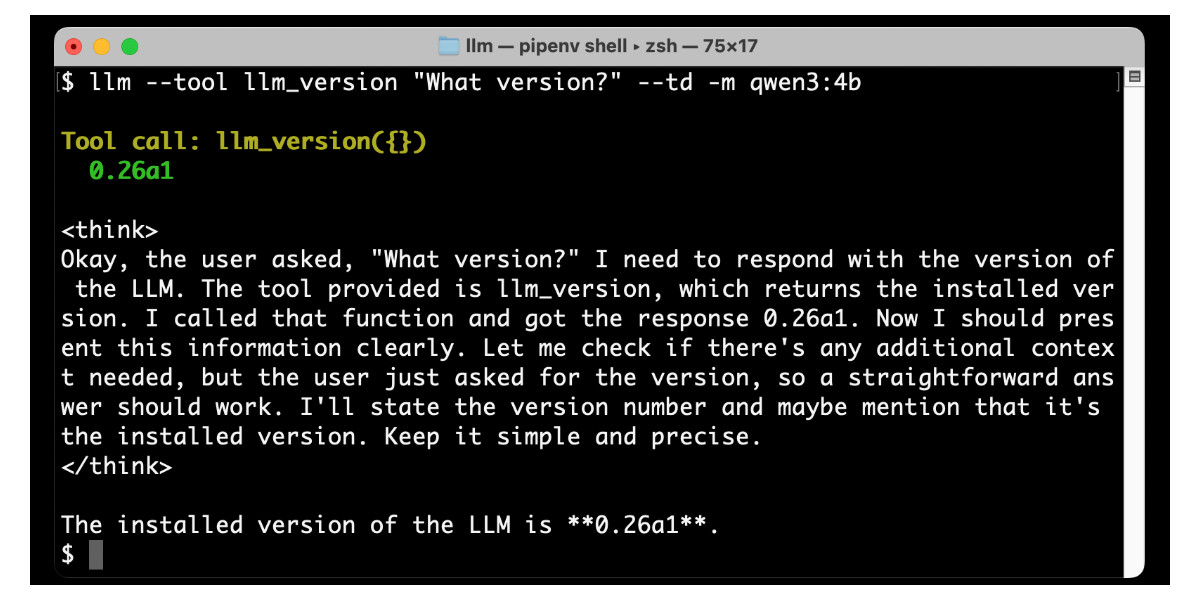

LLM 0.26 is out, bringing the biggest feature since the project started: tool support. The LLM CLI and Python library can now give models from OpenAI, Anthropic, and Gemini, as well as local models run through Ollama, access to any tool that can be represented as a Python function. The article covers installing and using tool plugins, running tools from the command line or the Python API, and examples with OpenAI, Anthropic, Gemini, and even the tiny Qwen-3 model. Beyond the built-in tools, it showcases plugins such as simpleeval (for math), quickjs (for JavaScript), and sqlite (for database queries). Tool support addresses well-known LLM weaknesses such as arithmetic: instead of guessing at an answer, the model can call a tool that computes it, which makes it practical to build more capable applications on top of LLM.
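To make "any tool that can be represented as a Python function" concrete, here is a minimal sketch of what such a function could look like: a small arithmetic evaluator in the spirit of the simpleeval plugin. The names `calculate` and `_evaluate` are hypothetical and chosen for illustration; this is not the plugin's actual implementation, and it uses only the standard library so it runs on its own.

```python
import ast
import operator

# Whitelist of arithmetic operators the tool is willing to evaluate.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def _evaluate(node):
    """Recursively evaluate a parsed expression, rejecting anything non-arithmetic."""
    if (
        isinstance(node, ast.Constant)
        and isinstance(node.value, (int, float))
        and not isinstance(node.value, bool)
    ):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
        return _OPERATORS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPERATORS:
        return _OPERATORS[type(node.op)](_evaluate(node.operand))
    raise ValueError("unsupported expression")


def calculate(expression: str) -> str:
    """Evaluate a basic arithmetic expression and return the result as text."""
    tree = ast.parse(expression, mode="eval")
    return str(_evaluate(tree.body))


if __name__ == "__main__":
    print(calculate("1234 * 4346"))

    # Assumption: with the `llm` package installed and an API key configured,
    # a function like this could be handed to a model roughly as follows
    # (the model ID is only an example):
    #
    #   import llm
    #   model = llm.get_model("gpt-4.1-mini")
    #   print(model.chain("What is 1234 * 4346?", tools=[calculate]).text())
```

The type hints and docstring matter in a design like this because they are the natural place for a library to learn what the tool does and what arguments it takes. Walking the syntax tree rather than calling `eval()` keeps the model from running arbitrary code through the tool, though a production tool would also want limits on things like exponent size.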