Apple Paper Delivers a Blow to LLMs: Tower of Hanoi Exposes Limitations

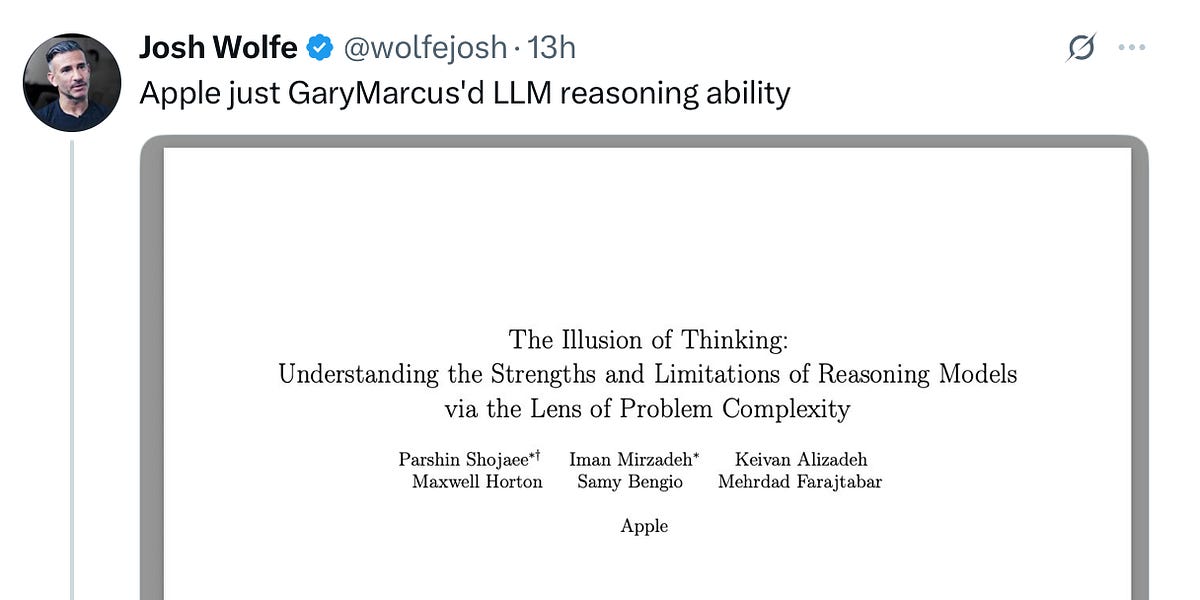

A new paper from Apple has sent ripples through the AI community. It shows that even the latest generation of "reasoning models" fail to reliably solve the classic Tower of Hanoi puzzle as the number of disks increases, exposing a critical weakness in the reasoning capabilities of Large Language Models (LLMs). The result echoes long-standing critiques from researchers such as Gary Marcus and Subbarao Kambhampati, who have repeatedly pointed to the limited ability of LLMs to generalize. Strikingly, the models still fail even when the solution algorithm is supplied in the prompt, which suggests that their "reasoning process" is not genuine logical reasoning. This indicates that LLMs are not a direct path to Artificial General Intelligence (AGI), and that their applications call for careful consideration.
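To make concrete what "being given the solution algorithm" means, here is a minimal Python sketch of the standard recursive Tower of Hanoi solution, together with a simple move checker. The function names `hanoi` and `is_valid_solution` and the peg numbering (0, 1, 2) are hypothetical choices for illustration; this is not the paper's prompt or evaluation code. The sketch also shows that the algorithm is only a few lines long while executing it for n disks takes 2^n − 1 consecutive legal moves, so a single illegal move anywhere invalidates the whole answer.

```python
from typing import List, Tuple

# Hypothetical representation: a move is (from_peg, to_peg), pegs numbered 0, 1, 2.
Move = Tuple[int, int]


def hanoi(n: int, src: int = 0, aux: int = 1, dst: int = 2) -> List[Move]:
    """Return the optimal move sequence that transfers n disks from src to dst."""
    if n == 0:
        return []
    # Park the top n-1 disks on the auxiliary peg, move the largest disk,
    # then restack the n-1 disks on top of it.
    return hanoi(n - 1, src, dst, aux) + [(src, dst)] + hanoi(n - 1, aux, src, dst)


def is_valid_solution(n: int, moves: List[Move]) -> bool:
    """Simulate the moves and check that every step obeys the puzzle's rules."""
    # Disks are labeled 1 (smallest) to n (largest); each peg lists bottom to top.
    pegs = [list(range(n, 0, -1)), [], []]
    for src, dst in moves:
        # Reject unknown pegs, no-op moves, and moves from an empty peg.
        if not (0 <= src <= 2 and 0 <= dst <= 2) or src == dst or not pegs[src]:
            return False
        disk = pegs[src][-1]
        # A larger disk may never be placed on a smaller one.
        if pegs[dst] and pegs[dst][-1] < disk:
            return False
        pegs[dst].append(pegs[src].pop())
    # Solved only if every disk ends up on the target peg in the right order.
    return pegs[2] == list(range(n, 0, -1))
```

For example, `hanoi(10)` produces 1,023 moves, and `is_valid_solution(10, hanoi(10))` returns `True`, while swapping in even one out-of-order move makes the checker return `False`.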