Dissecting a Minimalist Transformer: Unveiling the Inner Workings of LLMs with 10k Parameters

2025-09-04

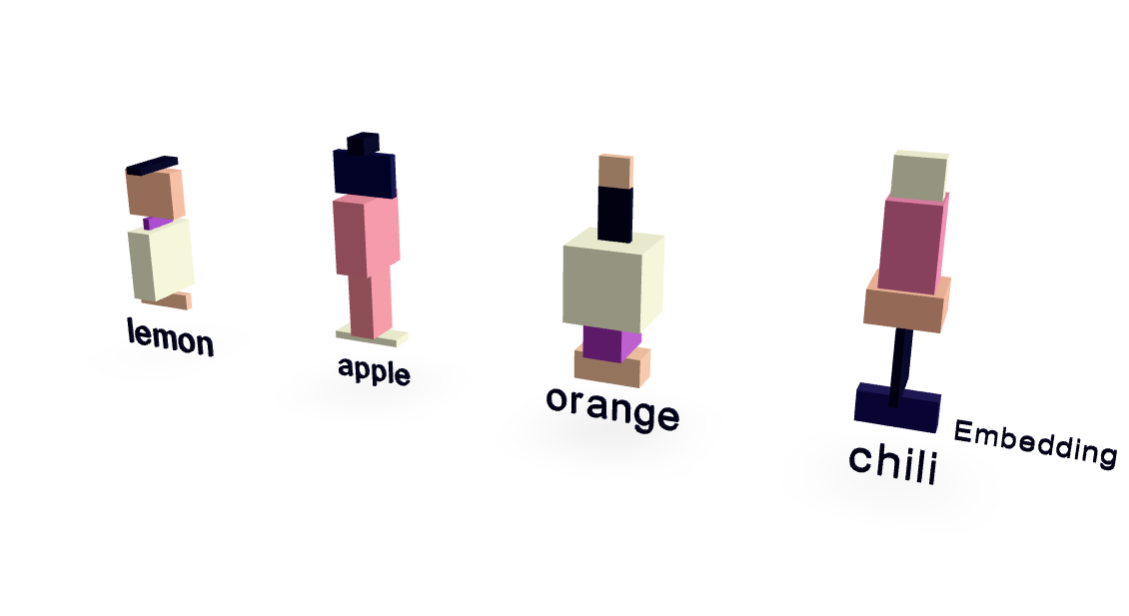

This paper presents a radically simplified Transformer model with only ~10,000 parameters, offering a clear window into the inner workings of large language models (LLMs). Using a minimal dataset focused on fruit and taste relationships, the authors achieve surprisingly strong performance. Visualizations reveal how word embeddings and the attention mechanism function. Crucially, the model generalizes beyond memorization, correctly predicting "chili" when prompted with "I like spicy so I like", demonstrating the core principles of LLM operation in a highly accessible manner.

AI