The Great LLM Hype: Benchmarks vs. Reality

2025-04-06

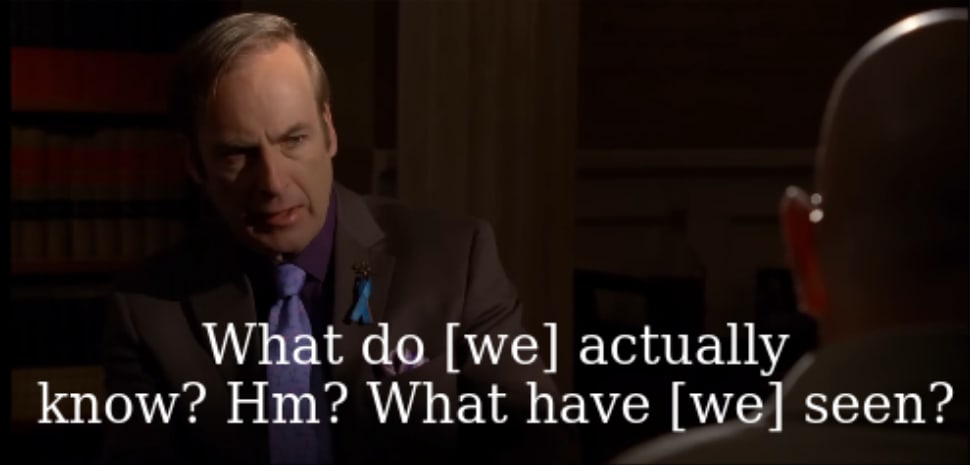

A startup that uses AI models for code security scanning has seen limited practical improvement since June 2024, even as benchmark scores have continued to rise. The author argues that recent advances in large language models have not translated into economic usefulness or generalizability, contrary to public claims. This raises concerns about how AI models are evaluated and about possible exaggeration of capabilities by AI labs. The author advocates prioritizing real-world application performance over benchmark scores and highlights the need for robust evaluation before AI is deployed in societal contexts.