Bitwig Studio 6 Beta Focuses on Editing and Automation

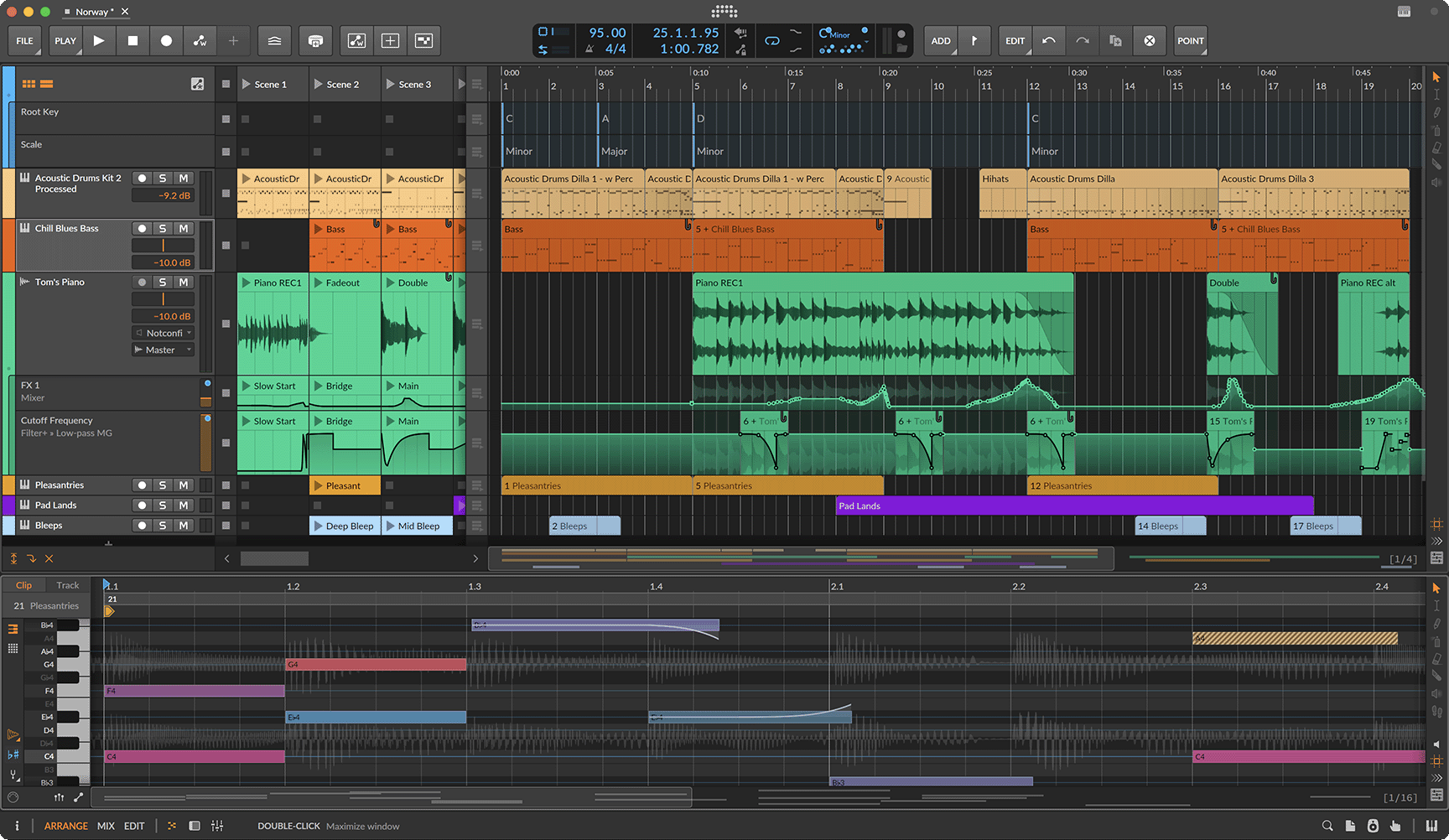

The Bitwig Studio 6 beta is out now. Rather than chasing AI or gimmicky features, this release concentrates on editing and automation workflows. New features include an Automation Mode, improved editing gestures, automation clips, project-wide key signatures, and a refreshed UI. Together, these changes significantly improve the editing experience and address long-standing requests from engineers and users.