Stop Building AI Agents!

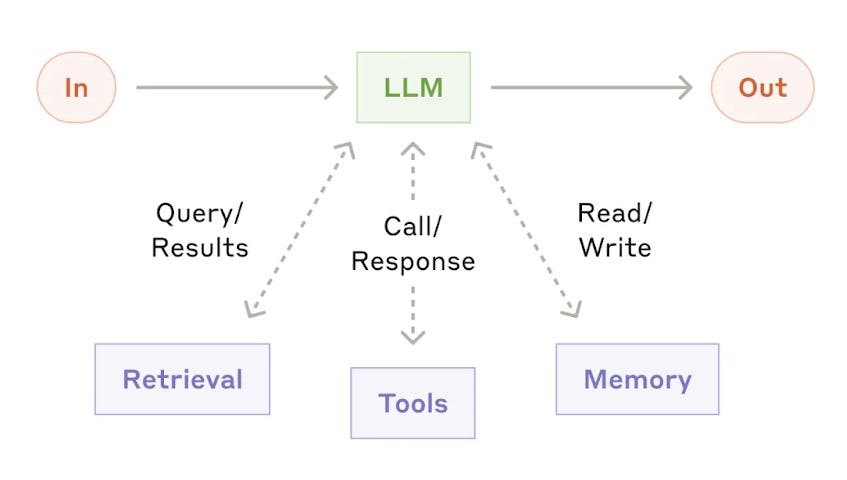

Hugo, who advises teams at Netflix, Meta, and the US Air Force on building LLM-powered systems, argues that many teams adopt AI agents prematurely and end up with complex, hard-to-debug systems. He contends that simpler workflows such as chaining, parallel processing, routing, and orchestrator-worker patterns are often more effective. Agents are the right tool only when a workflow is dynamic and requires memory, delegation, and planning. He shares five LLM workflow patterns and emphasizes building systems that are observable and controllable. His advice: avoid agents for stable enterprise systems; they are better suited to human-in-the-loop scenarios.
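To make two of the named patterns concrete, here is a minimal sketch of chaining and routing. It is an illustration under stated assumptions, not the author's code: `fake_llm`, `chain`, and `route` are hypothetical names, and `fake_llm` is a stub standing in for a real model call so the example runs without any API.

```python
from typing import Callable, Dict, List

# Assumption: an "LLM" is modeled as any function from prompt text to response text.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; wraps the prompt in brackets."""
    return f"<{prompt}>"


def chain(llm: LLM, steps: List[str], text: str) -> str:
    """Chaining: a fixed sequence of calls where each output feeds the next step."""
    for instruction in steps:
        text = llm(f"{instruction}: {text}")
    return text


def route(
    classify: Callable[[str], str],
    handlers: Dict[str, Callable[[str], str]],
    text: str,
    default: str,
) -> str:
    """Routing: classify the input once, then dispatch to exactly one handler."""
    label = classify(text)
    # Unknown labels fall back to the default handler instead of failing.
    handler = handlers.get(label, handlers[default])
    return handler(text)


def classify_ticket(text: str) -> str:
    """Hypothetical classifier; in practice this could itself be an LLM call."""
    return "billing" if "invoice" in text.lower() else "general"


handlers = {
    "billing": lambda t: chain(fake_llm, ["extract invoice id", "draft reply"], t),
    "general": lambda t: fake_llm(f"answer: {t}"),
}

if __name__ == "__main__":
    print(route(classify_ticket, handlers, "Where is my invoice?", default="general"))
```

In both functions the control flow lives in ordinary code rather than in the model, so each step can be logged and tested on its own. That is the sense in which such workflows are easier to observe and control than an agent that decides its own next action.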
