A Senior Data Scientist's Pragmatic Take on Generative AI

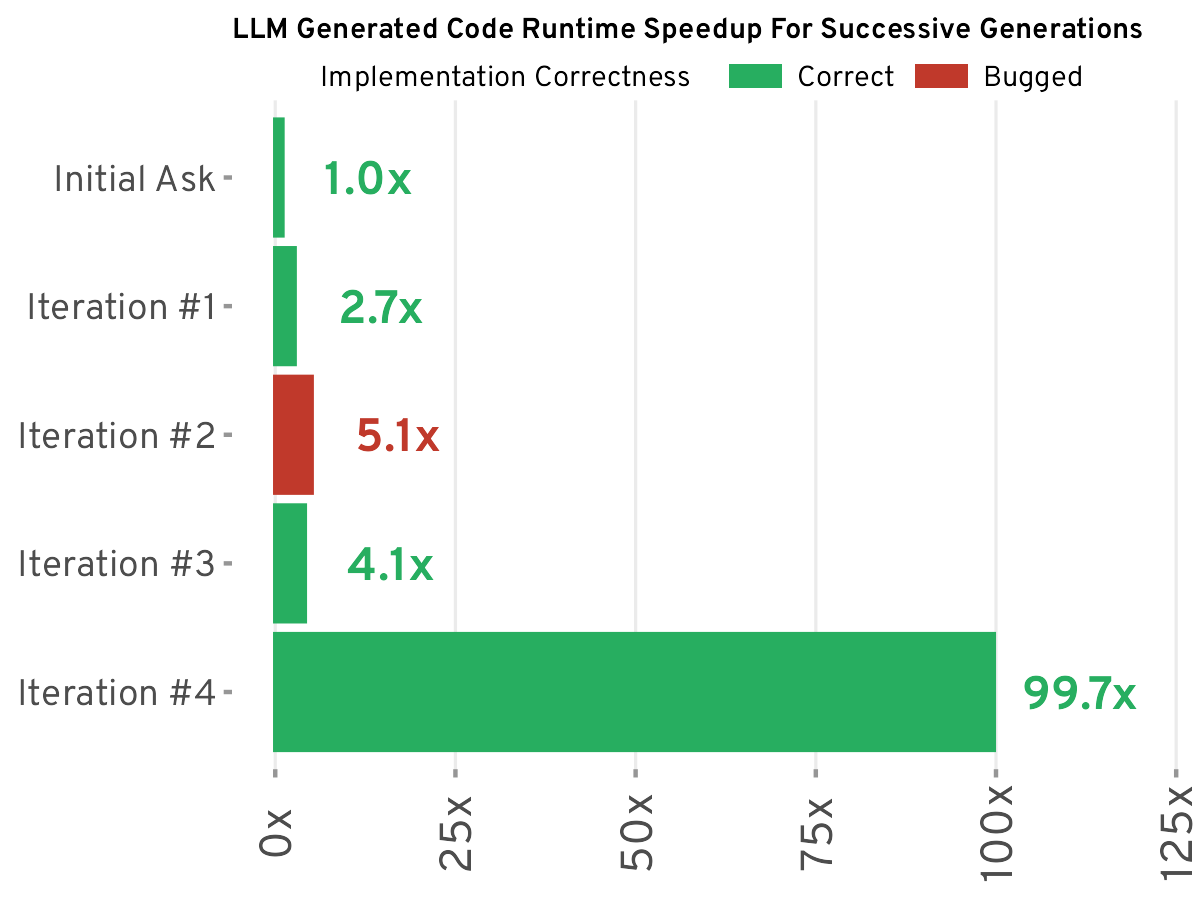

A senior data scientist at BuzzFeed shares his pragmatic approach to using large language models (LLMs). He treats LLMs not as a silver bullet but as a tool for working more efficiently, and he stresses the importance of prompt engineering. The article details his successful use of LLMs for data categorization, text summarization, and code generation, and acknowledges their limitations, particularly in complex data science scenarios where accuracy and efficiency can suffer. Used judiciously, he argues, LLMs can significantly boost productivity; the key is selecting the right tool for the job.
