Can Iterative Prompting Make LLMs Write Better Code?

2025-01-03

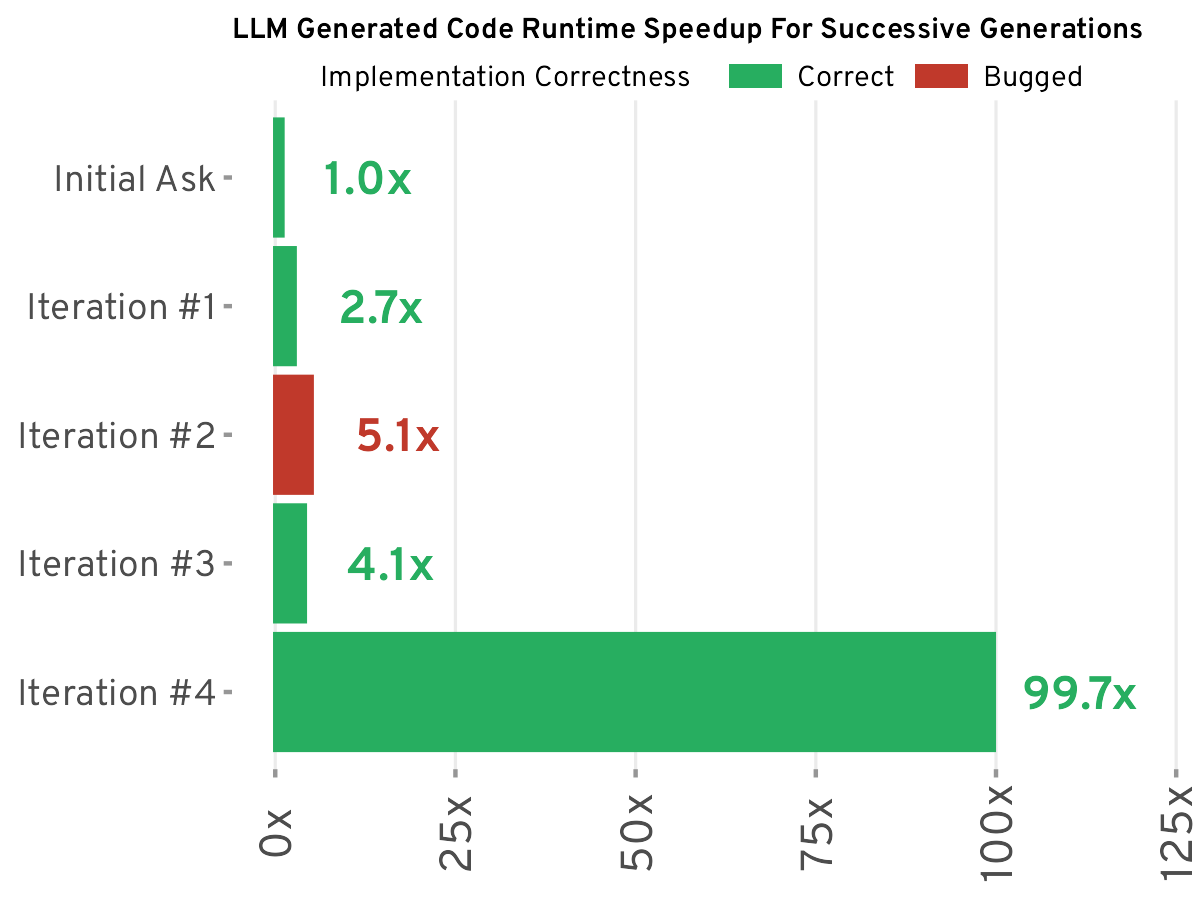

This blog post describes an experiment testing whether repeatedly prompting a Large Language Model (LLM) to "write better code" actually improves the code it produces. Using Claude 3.5 Sonnet, the author poses a simple Python coding problem and then iteratively asks the model to improve its previous answer. Performance improves dramatically, with the final code achieving a roughly 100x speedup over the initial implementation. However, simple iterative prompting also leads to over-engineered code. Precise prompt engineering, by contrast, yields far more efficient code. The experiment shows that LLMs can assist with code optimization, but human intervention and expertise remain crucial for ensuring quality and efficiency.
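The iterative prompting loop can be sketched as follows. This is a minimal illustration, not the author's harness. It assumes each follow-up is sent in the same conversation, so the model sees its own previous answer. `ask_llm` is a hypothetical callable standing in for whatever chat API wraps Claude 3.5 Sonnet. The message format (a list of `role`/`content` dicts) and the `rounds` parameter are also illustrative assumptions.

```python
from typing import Callable

# A chat message: {"role": "user" | "assistant", "content": "..."}
Message = dict[str, str]


def iterate_prompts(
    initial_prompt: str,
    ask_llm: Callable[[list[Message]], str],  # hypothetical wrapper around a chat-completion API
    rounds: int = 4,
    followup: str = "write better code",
) -> list[str]:
    """Ask once, then repeatedly send `followup`; return the reply from every round."""
    history: list[Message] = [{"role": "user", "content": initial_prompt}]
    candidates: list[str] = []
    for i in range(rounds):
        reply = ask_llm(history)  # the model sees the full conversation so far
        candidates.append(reply)
        history.append({"role": "assistant", "content": reply})
        if i < rounds - 1:
            # Each new round only asks for "better" code, with no extra guidance
            history.append({"role": "user", "content": followup})
    return candidates


def fake_llm(history: list[Message]) -> str:
    # Stand-in for a real model: labels its reply with the conversation length.
    return f"candidate after {len(history)} messages"


versions = iterate_prompts("Solve this Python problem ...", fake_llm, rounds=3)
for v in versions:
    print(v)
```

Keeping the whole history is what makes this "iterative": each round builds on the last answer. It also means the vague follow-up gives the model no direction beyond "better", which fits the over-engineering the post observes. A precisely engineered prompt would replace `followup` (or `initial_prompt`) with explicit constraints.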