DeepSeek's AI Breakthrough: Bypassing CUDA for 10x Efficiency

2025-01-29

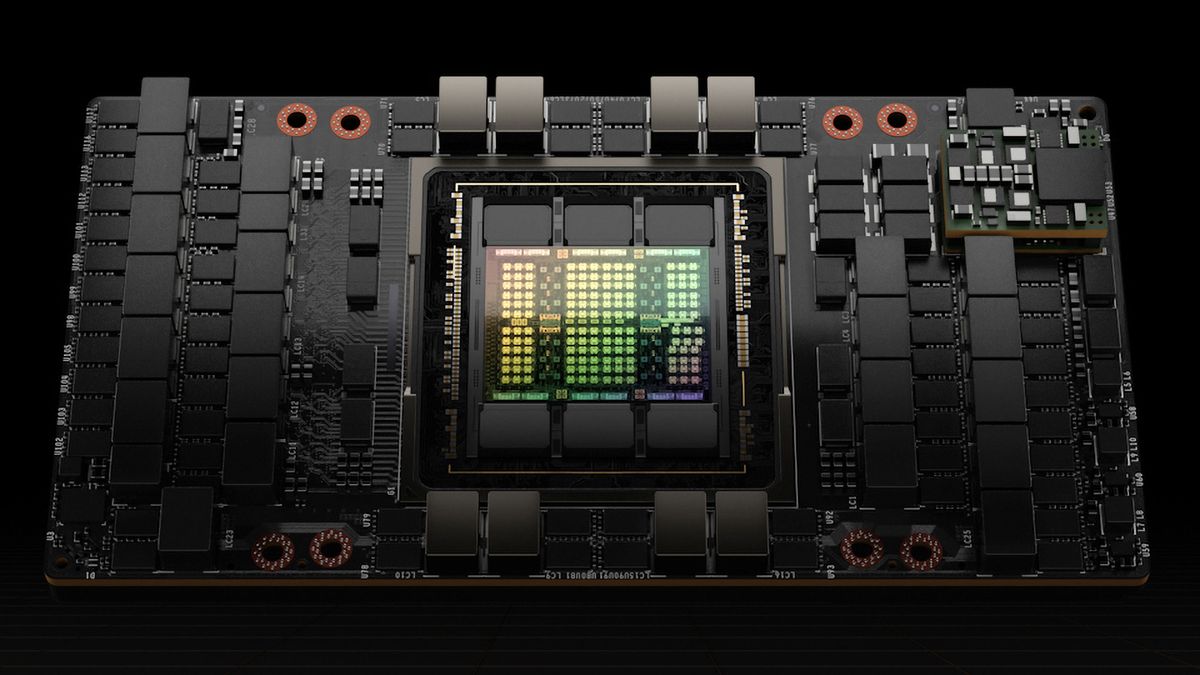

DeepSeek achieved a 10x efficiency boost in AI model training by bypassing the industry-standard CUDA and writing code in Nvidia's PTX (Parallel Thread Execution) instead. PTX is the low-level, assembly-like intermediate instruction set that CUDA code is normally compiled into. Using 2,048 Nvidia H800 GPUs, DeepSeek trained a 671-billion-parameter Mixture-of-Experts (MoE) language model in about two months. The gains came from meticulous optimizations written in PTX. These included reassigning some of each GPU's streaming multiprocessors (SMs) to handle communication between servers and implementing advanced pipeline-parallelism algorithms. Hand-tuned PTX is costly to maintain. Even so, the drastic reduction in training costs sent shockwaves through the market and contributed to a significant drop in Nvidia's market capitalization.
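The Python sketch below gives a rough sense of the compute involved. It treats "two months" as 60 days of round-the-clock use on all 2,048 GPUs. It also uses a hypothetical rental price of $2 per GPU-hour. Neither the 60-day figure nor the price comes from this article; both are illustrative assumptions.

```python
# Back-of-envelope compute and cost estimate for the training run described above.
# Assumptions (not from the article): "two months" = 60 days, every GPU is busy
# the whole time, and the $2/GPU-hour rental price is hypothetical.

NUM_GPUS = 2_048          # H800 GPUs, as stated in the article
DAYS = 60                 # assumed length of "two months"
HOURS_PER_DAY = 24
PRICE_PER_GPU_HOUR = 2.0  # hypothetical rental price in USD


def gpu_hours(num_gpus: int, days: int) -> int:
    """Total GPU-hours if every GPU runs for the whole period."""
    return num_gpus * days * HOURS_PER_DAY


def rental_cost(hours: int, price_per_hour: float) -> float:
    """Cost in USD of renting the given number of GPU-hours."""
    return hours * price_per_hour


if __name__ == "__main__":
    total_hours = gpu_hours(NUM_GPUS, DAYS)
    cost = rental_cost(total_hours, PRICE_PER_GPU_HOUR)
    print(f"GPU-hours: {total_hours:,}")    # GPU-hours: 2,949,120
    print(f"Estimated cost: ${cost:,.0f}")  # Estimated cost: $5,898,240
```

Real training runs rarely keep every GPU fully busy. The result should therefore be read as an upper bound on GPU-hours under these assumptions, not as a reported figure.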
