LLMs: The Unexpected Success of Document Ranking

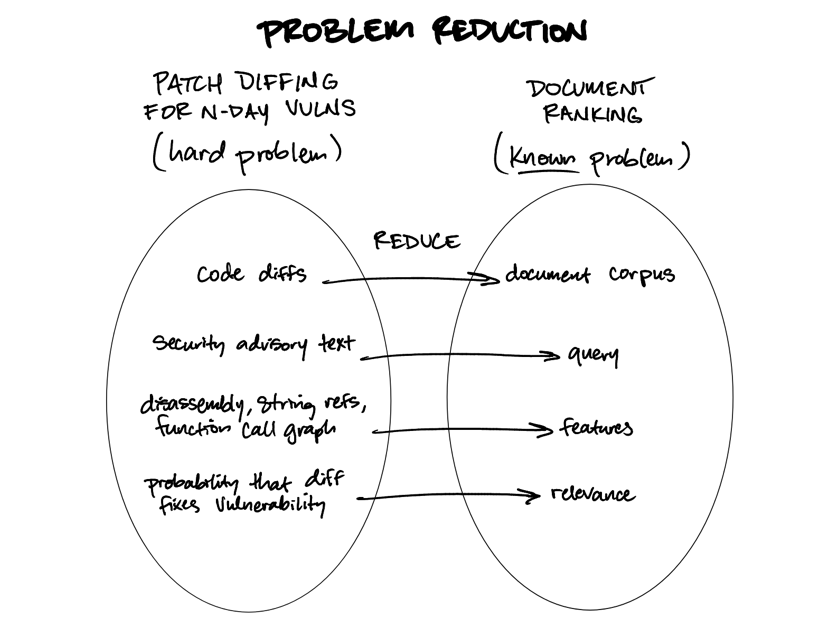

This paper argues that Large Language Models (LLMs) are effective at listwise document ranking and that, surprisingly, some complex problems can be solved by recasting them as document ranking problems. The author demonstrates this by using patch diffing to locate N-day vulnerabilities. When the problem is reframed as ranking diffs (the documents) by their relevance to a security advisory (the query), an LLM can efficiently pinpoint the specific function that fixes a vulnerability. The technique has been validated at multiple security conferences and can be applied to other security problems, such as fuzzing target selection and prioritization. Future improvements include analyzing the ranked results and generating verifiable evidence, for example by automatically producing testable proof-of-concept exploits.
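The reframing described above can be illustrated with a minimal Python sketch. It is not the author's implementation: the batching, shuffling, and rank-averaging scheme is one plausible way to do listwise ranking when all diffs do not fit in a single prompt, and every name here (`Ranker`, `overlap_ranker`, `listwise_rank`, the toy `advisory` and `diffs` strings) is hypothetical. The token-overlap ranker is only a stand-in so the example runs without a model; in practice it would be replaced by a function that asks an LLM to order a small batch of diffs.

```python
import random
from collections import defaultdict
from typing import Callable, List, Sequence

# A ranker receives the query plus a small batch of documents and returns the
# batch indices ordered from most to least relevant. This interface is an
# assumption made for the sketch; a real ranker would wrap an LLM call.
Ranker = Callable[[str, Sequence[str]], List[int]]


def overlap_ranker(query: str, batch: Sequence[str]) -> List[int]:
    """Hypothetical stand-in for an LLM: order a batch by shared tokens."""
    query_tokens = set(query.lower().split())
    scores = [len(query_tokens & set(doc.lower().split())) for doc in batch]
    return sorted(range(len(batch)), key=lambda i: -scores[i])


def listwise_rank(query: str, docs: Sequence[str], ranker: Ranker,
                  batch_size: int = 3, passes: int = 5,
                  seed: int = 0) -> List[int]:
    """Rank docs by averaging their positions over several shuffled passes."""
    rng = random.Random(seed)
    positions = defaultdict(list)
    ids = list(range(len(docs)))
    for _ in range(passes):
        rng.shuffle(ids)  # new batch composition on every pass
        for start in range(0, len(ids), batch_size):
            batch_ids = ids[start:start + batch_size]
            order = ranker(query, [docs[i] for i in batch_ids])
            for pos, local in enumerate(order):
                # Normalise to (0, 1) so batches of different sizes compare.
                positions[batch_ids[local]].append((pos + 0.5) / len(batch_ids))
    mean = {i: sum(p) / len(p) for i, p in positions.items()}
    # Lower mean position means more relevant.
    return sorted(range(len(docs)), key=lambda i: mean[i])


# Toy data, invented for illustration: the advisory is the query and each
# one-line "diff" stands in for a changed function.
advisory = "heap buffer overflow in parse_header when length check is missing"
diffs = [
    "update README wording",
    "parse_header add length check to avoid heap buffer overflow",
    "bump version string",
    "rename logging helper",
    "reformat build script",
    "adjust test timeout",
]

ranking = listwise_rank(advisory, diffs, overlap_ranker)
print([diffs[i] for i in ranking[:2]])
```

Averaging over several shuffled passes is a design choice in this sketch: each diff is compared against different neighbours on each pass, so a single unlucky batch has less influence on the final order.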