AI: A Recursive Paradigm Shift

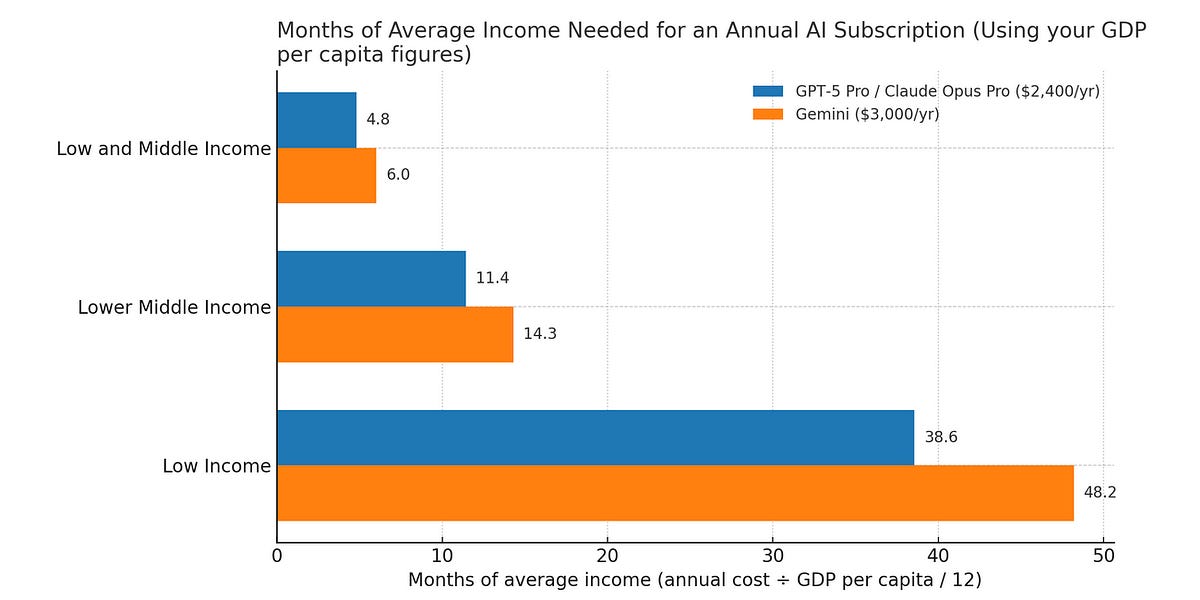

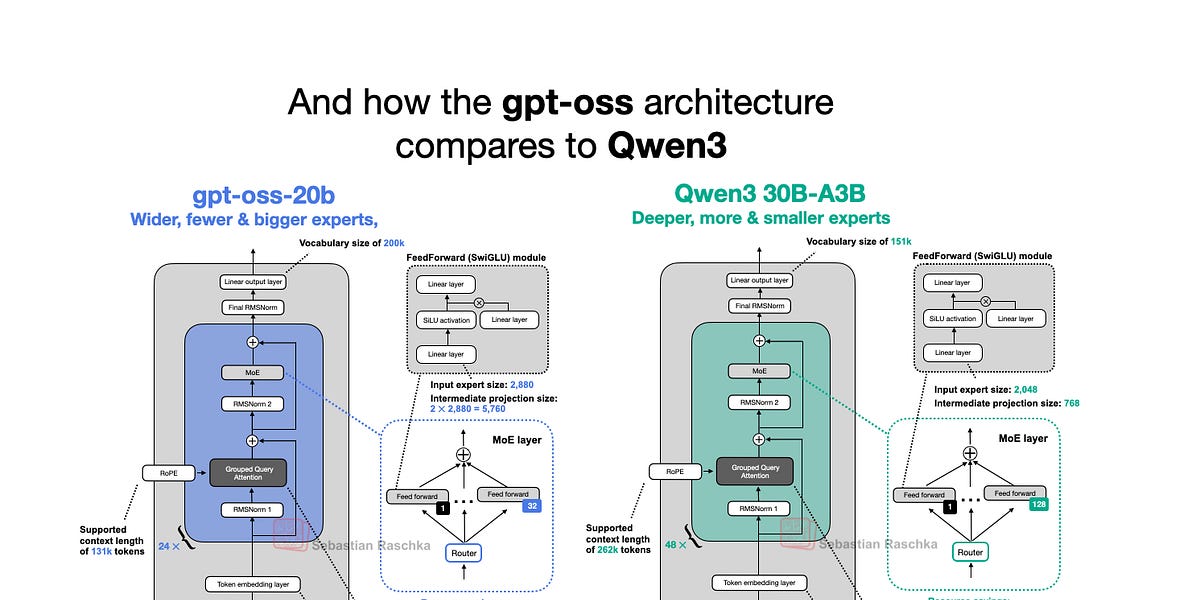

This article explores the revolutionary impact of Artificial Intelligence (AI) as a new General Purpose Technology (GPT). AI is changing not only how we access knowledge but also how we think, and it is even triggering a recursive paradigm shift: software uses AI, AI uses software, AI builds software, and AI itself is software. The author argues that AI's rapid development brings immense opportunities and challenges, and that we must adapt and participate actively by exploring future AI applications and redefining our roles in this technological transformation.