Running LLMs Locally: A Developer's Guide

2024-12-29

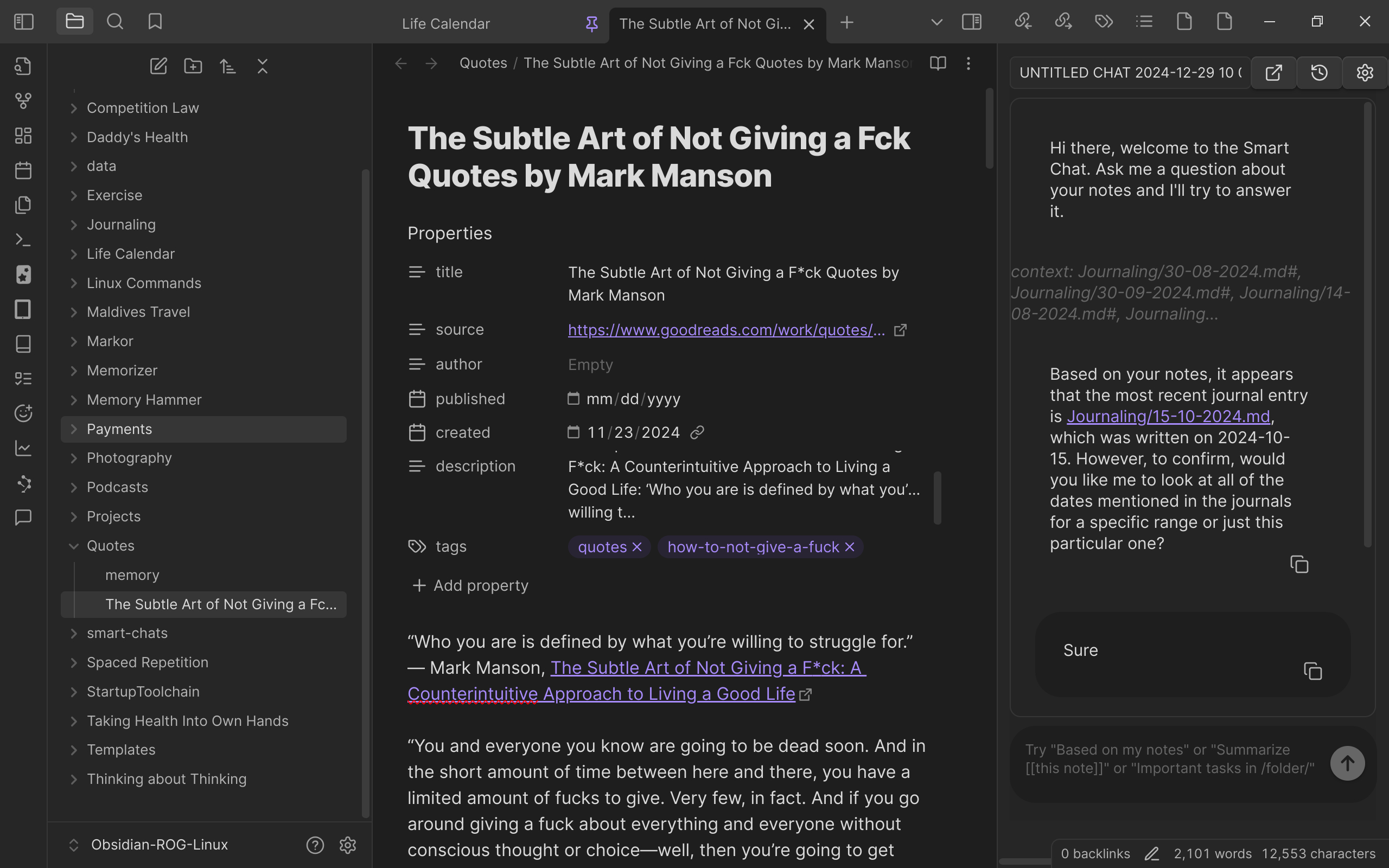

A developer shares their experience running large language models (LLMs) on a personal computer. On a high-end workstation (Intel Core i9 CPU, NVIDIA RTX 4090 GPU, 96 GB of RAM) and with the open-source tools Ollama and Open WebUI, they run several LLMs for tasks such as code completion and querying their notes. The article covers the hardware, software, and models used, as well as how they are kept up to date, and highlights two advantages of running LLMs locally: data security and low latency.
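To make "running locally" concrete, here is a minimal Python sketch that sends a prompt to a local Ollama server over its HTTP API using only the standard library. It assumes Ollama is running on its default address (`http://localhost:11434`) and that a model has already been downloaded with `ollama pull`. The helper names `build_request` and `generate` are hypothetical, and the article does not say its author calls the API this way.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint; requires `ollama serve` to be running.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request (hypothetical helper)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to localhost and return the model's reply text.

    With "stream": False, Ollama returns a single JSON object whose
    "response" field holds the generated text.
    """
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

For example, `generate("llama3.1", "Write a Python function that reverses a string.")` returns the reply as a string, where `llama3.1` is a placeholder for whichever model you have pulled. Because the request goes to `localhost`, the prompt never leaves the machine, which is where the data-security advantage comes from. Disabling streaming trades token-by-token output for a single response that can be parsed with one `json.loads` call.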