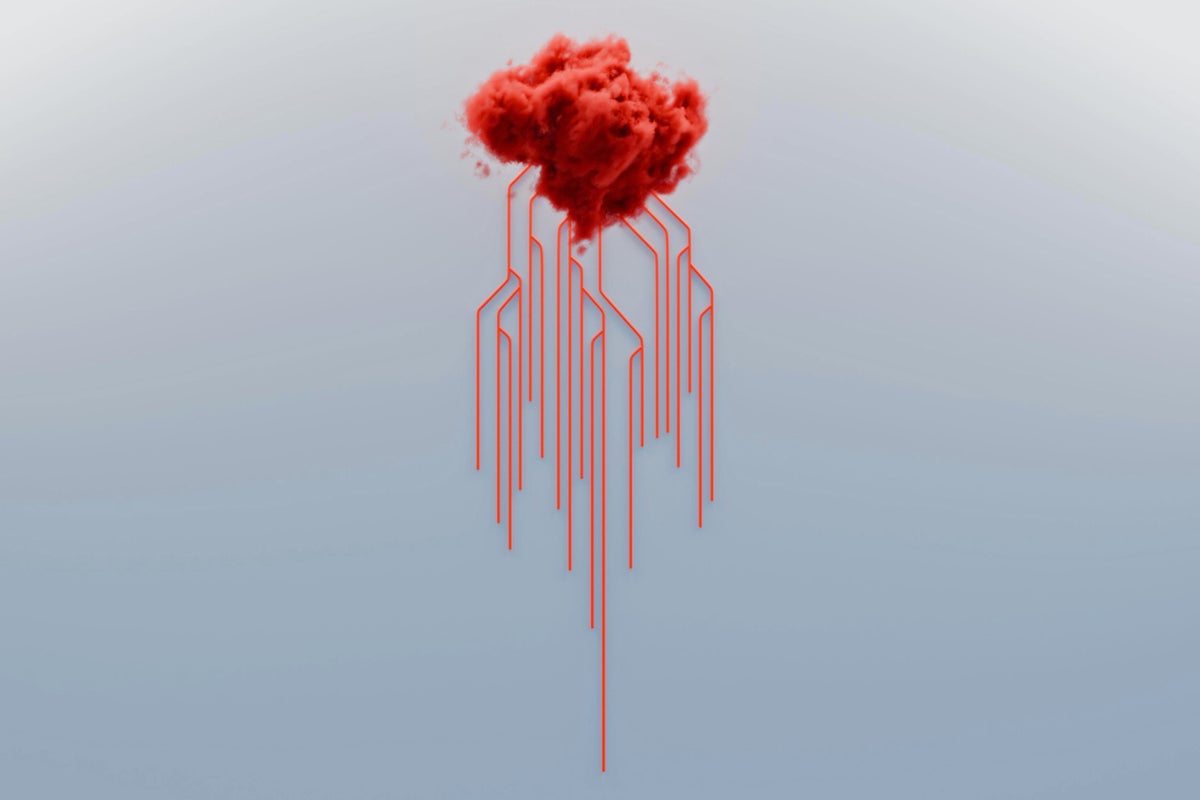

AI Alignment: A Fool's Errand?

2025-01-28

The emergence of large language models (LLMs) has raised safety concerns, including models that issue threats or rewrite code. Researchers are trying to steer AI behavior toward human values through "alignment," but the author argues that this is nearly impossible. LLMs are far more complex than chess: the number of functions they can learn is near-infinite, so exhaustively testing their behavior is impossible. The author's paper proves that even carefully designed goals cannot guarantee that an LLM will not deviate from them. Truly solving AI safety, the author argues, requires a societal approach: establishing mechanisms, similar to the rules that govern human societies, to constrain AI behavior.