The Platonic Representation Hypothesis: Towards Universal Embedding Inversion and Whale Communication

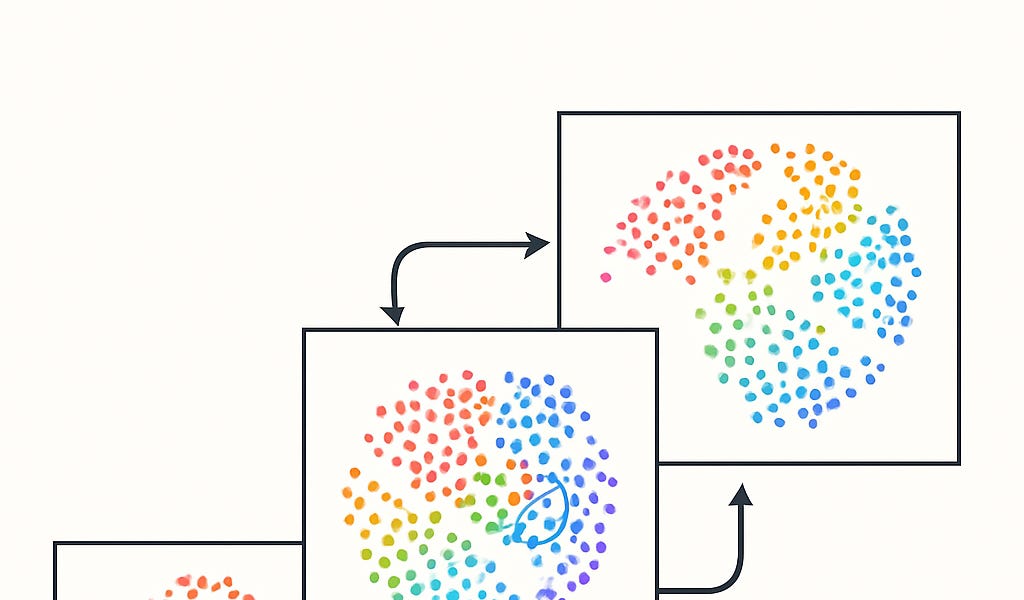

The 'Platonic Representation Hypothesis' proposes that large language models converge towards a shared underlying representation space as they grow larger. If it holds, different models learn similar features regardless of architecture. The article uses the 'Mussolini or Bread' game as an analogy for this shared representation and supports the hypothesis with arguments from compression theory and model generalization. Building on the hypothesis, researchers developed vec2vec, an unsupervised method for translating embeddings between the spaces of different models, which in turn enables accurate inversion of text embeddings. Possible future applications include deciphering ancient scripts such as Linear A or translating whale communication, which would open new possibilities for cross-lingual understanding and for AI research more broadly.
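One way to make the convergence claim concrete is to check whether two embedding spaces agree on which inputs are neighbors of each other. The following is a minimal sketch, assuming a mutual k-nearest-neighbor overlap score as the similarity measure. The function name `mutual_knn_alignment` and the synthetic data are illustrative assumptions, not taken from the article, and this is not the vec2vec method itself.

```python
import numpy as np

def mutual_knn_alignment(emb_a, emb_b, k=5):
    """Average overlap of the k-nearest-neighbor sets found in two embedding spaces.

    emb_a and emb_b hold embeddings of the SAME inputs, row for row,
    but may come from different models with different dimensions.
    """
    def knn_indices(emb):
        # Cosine similarity between every pair of rows.
        normed = emb / np.linalg.norm(emb, axis=1, keepdims=True)
        sim = normed @ normed.T
        np.fill_diagonal(sim, -np.inf)  # a point is not its own neighbor
        return np.argsort(-sim, axis=1)[:, :k]

    nn_a = knn_indices(emb_a)
    nn_b = knn_indices(emb_b)
    overlaps = [len(set(a) & set(b)) / k for a, b in zip(nn_a, nn_b)]
    return float(np.mean(overlaps))

# Synthetic stand-ins for two models (assumption: real embeddings would go here).
rng = np.random.default_rng(0)
base = rng.normal(size=(200, 16))

# A rotated copy has the same geometry in a different coordinate system.
rotation, _ = np.linalg.qr(rng.normal(size=(16, 16)))
rotated = base @ rotation

# Independent random vectors share no structure with `base`.
unrelated = rng.normal(size=(200, 16))

score_same = mutual_knn_alignment(base, rotated)
score_diff = mutual_knn_alignment(base, unrelated)
print(f"rotated copy: {score_same:.2f}, unrelated: {score_diff:.2f}")
```

A score near 1 means the two spaces encode the same neighborhood structure even though their coordinates differ, which is the kind of agreement the hypothesis predicts between sufficiently large models. A score near 0 means the spaces are unrelated.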
