Fine-tuning LLMs: Knowledge Injection or Destructive Overwrite?

2025-06-11

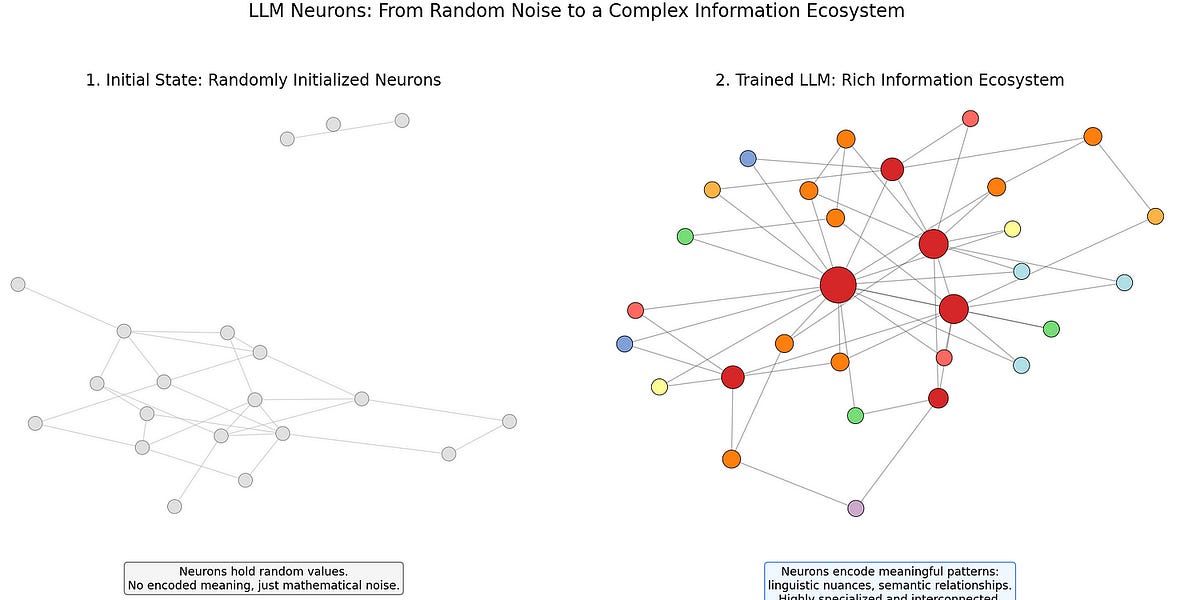

This article examines the limits of fine-tuning large language models (LLMs). The author argues that for advanced LLMs, fine-tuning is not simple knowledge injection: because it updates weights that already encode learned information, it can destructively overwrite existing knowledge structures. The article explains how neural networks store information across neurons and how fine-tuning can erase crucial information held in those neurons, with unexpected consequences for the model's behavior. The author advocates modular approaches such as retrieval-augmented generation (RAG), adapter modules, and prompt engineering, which add new knowledge without overwriting what the model has already learned.
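
The contrast between overwriting weights and attaching a separate module can be illustrated with a toy example. The following is a minimal sketch, not the author's method: it assumes a two-weight linear model in place of an LLM, and every name in it (`predict`, `sgd_step`, `base`, `delta`) is hypothetical and chosen only for illustration.

```python
# Toy linear "model": y = sum(w_i * x_i). A stand-in for an LLM, for illustration only.

def predict(weights, x):
    return sum(w * xi for w, xi in zip(weights, x))

def sgd_step(weights, x, target, lr=0.1):
    """Return new weights after one squared-error gradient step."""
    error = predict(weights, x) - target
    return [w - lr * 2 * error * xi for w, xi in zip(weights, x)]

base = [1.0, 2.0]                 # "pretrained" weights
old_x, old_y = [1.0, 1.0], 3.0    # behavior the base model already gets right
new_x, new_y = [1.0, 0.0], 5.0    # new fact to inject

# Full fine-tuning: the pretrained weights themselves are overwritten.
tuned = list(base)
for _ in range(50):
    tuned = sgd_step(tuned, new_x, new_y)

# Adapter-style update: base stays frozen, only a separate delta is trained.
delta = [0.0, 0.0]
for _ in range(50):
    combined = [b + d for b, d in zip(base, delta)]
    updated = sgd_step(combined, new_x, new_y)
    delta = [u - b for u, b in zip(updated, base)]

with_adapter = [b + d for b, d in zip(base, delta)]

old_error_tuned = abs(predict(tuned, old_x) - old_y)  # old behavior after fine-tuning
old_error_base = abs(predict(base, old_x) - old_y)    # old behavior of the frozen base

print("fine-tuned error on old input:", old_error_tuned)
print("frozen base error on old input:", old_error_base)
print("adapter answer on new input:", predict(with_adapter, new_x))
```

In this toy, fine-tuning on the new fact shifts a weight that the old input also relies on, so the fine-tuned model no longer reproduces the old answer. The adapter learns the same shift, and while it is attached the old input is affected in the same way. The difference is that `base` is never modified: detaching `delta` restores the original behavior exactly, which is not possible once the pretrained weights have been overwritten.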
