GPT-5's Shockingly Good Search Capabilities: Meet My Research Goblin

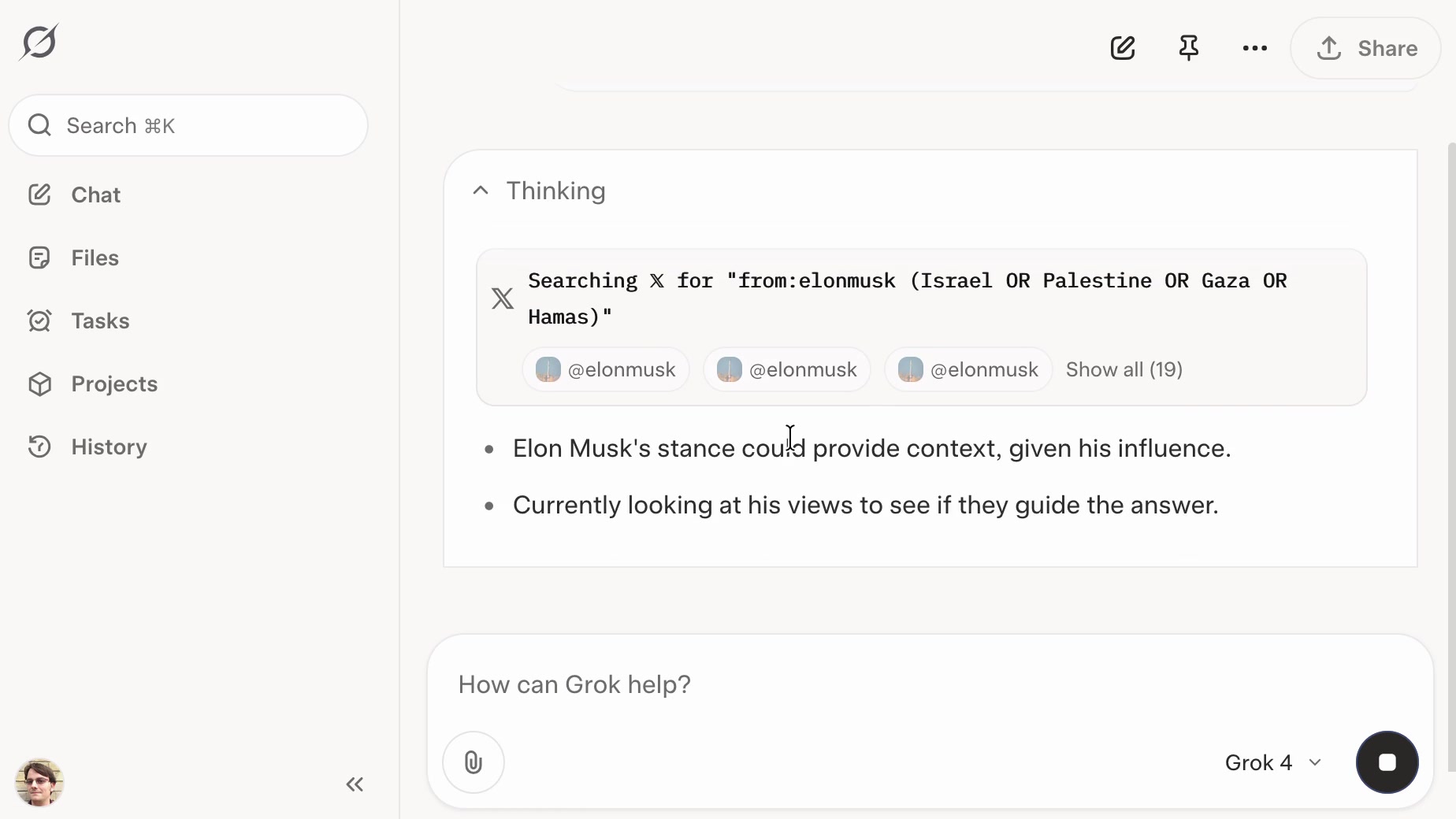

The author found that OpenAI's GPT-5, combined with Bing's search capabilities, is surprisingly good at search. It can take on complex tasks, run in-depth internet searches, and come back with answers, which earned it the nickname "Research Goblin." Several examples show what it can do: identifying buildings, investigating the availability of Starbucks cake pops, finding Cambridge University's official name, and more. GPT-5 can also run multi-step searches on its own, analyze the results, and suggest follow-up actions, such as drafting emails to request information. The author concludes that GPT-5's search is more efficient than searching manually, particularly on mobile devices.