Apple's FastVLM: A Blazing-Fast Vision-Language Model

2025-07-24

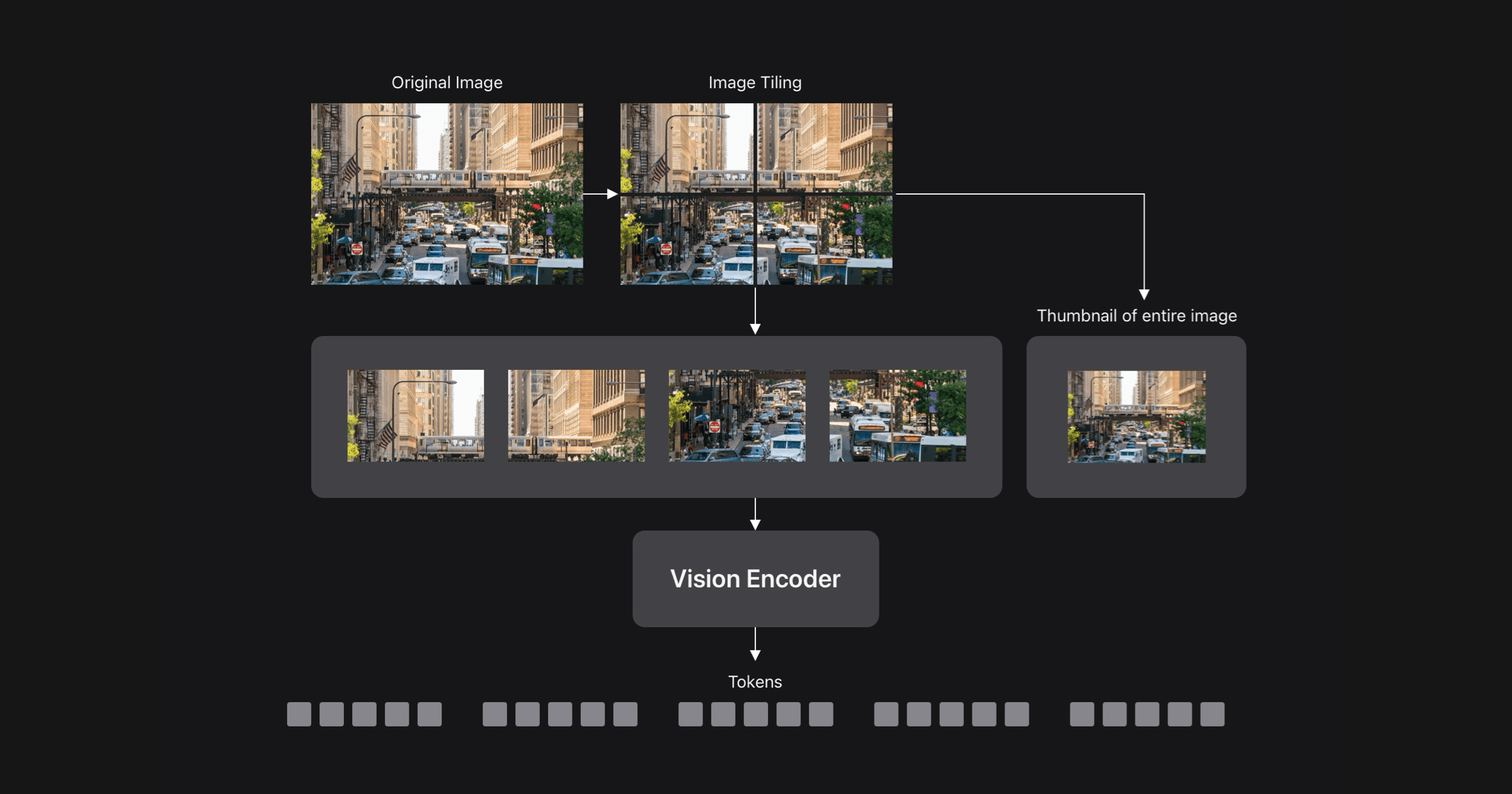

Apple ML researchers presented FastVLM, a Vision Language Model (VLM), at CVPR 2025. FastVLM targets the accuracy-efficiency trade-off in VLMs: higher-resolution input tends to improve accuracy, but it also produces more visual tokens, which slows both the vision encoder and the LLM pre-filling step. Its hybrid (convolutional and transformer) vision encoder, FastViTHD, is designed for high-resolution images and generates fewer, higher-quality visual tokens, which speeds up pre-filling. According to the researchers, the resulting VLM is significantly faster and more accurate than comparable models, which makes real-time on-device applications and privacy-preserving AI practical. An iOS/macOS demo app showcases FastVLM's on-device capabilities.
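To make the link between visual token count and pre-filling cost concrete, here is a minimal back-of-the-envelope sketch. It is not FastVLM's code: the function names, the downsampling strides, and all latency figures are hypothetical, and pre-fill time is modeled as linear in token count, which is a simplification.

```python
def visual_token_count(image_size: int, stride: int) -> int:
    """Tokens emitted by an encoder that downsamples each image side by `stride`."""
    side = image_size // stride
    return side * side


def time_to_first_token(encoder_ms: float, n_visual: int, n_text: int,
                        prefill_ms_per_token: float) -> float:
    """Simplified model: encoder latency plus a linear LLM pre-fill cost."""
    return encoder_ms + (n_visual + n_text) * prefill_ms_per_token


# Hypothetical comparison at 1024x1024 input (all numbers are illustrative only).
fine_grid = visual_token_count(1024, 16)    # encoder with a fine output grid
coarse_grid = visual_token_count(1024, 64)  # encoder with a coarser output grid

print(fine_grid, coarse_grid)
print(time_to_first_token(50.0, fine_grid, 32, 0.5))
print(time_to_first_token(50.0, coarse_grid, 32, 0.5))
```

Under these assumed numbers, the coarser grid emits 16 times fewer visual tokens, so the pre-fill term shrinks accordingly and the encoder's own latency becomes the larger share of time-to-first-token.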