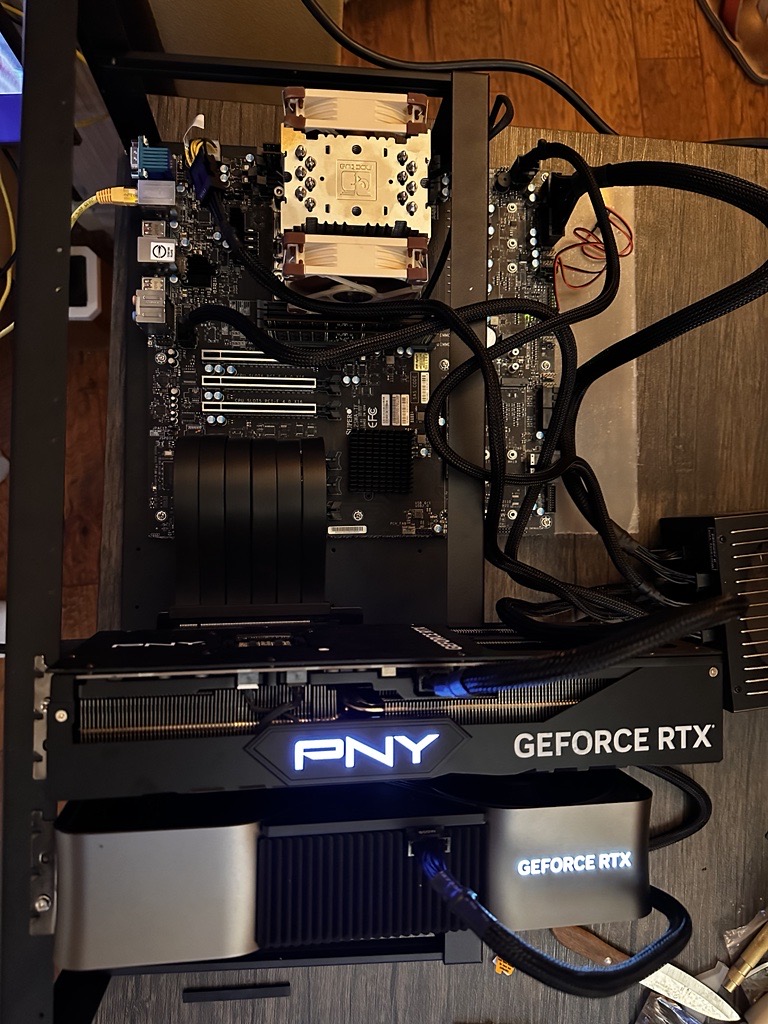

4x 4090 GPUs: Training Your Own LLMs Locally

2024-12-28

An AI enthusiast built a local rig for training large language models (LLMs) on four NVIDIA RTX 4090 GPUs, at a cost of around $12,000. The setup can train models of up to 1 billion parameters, though it performs best with models of around 500 million. The article covers hardware selection (motherboard, CPU, RAM, GPUs, storage, PSU, case, cooling), assembly, software configuration (OS, drivers, frameworks, custom kernel), model training, optimization, and maintenance. Among its tips is George Hotz's kernel patch, which enables peer-to-peer (P2P) communication between RTX 40-series (4xxx) GPUs. While highlighting the benefits of on-premise training, the author acknowledges that cloud solutions can be more cost-effective for some tasks.
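One way to see why roughly 1 billion parameters is a practical ceiling on 24 GB cards is a back-of-envelope memory estimate. The sketch below is not the author's method. It assumes full training with mixed-precision AdamW at the commonly cited 16 bytes per parameter, and plain data parallelism where every GPU holds a full replica of the model state. The summary does not say which parallelism strategy the author used; sharded approaches such as ZeRO or FSDP would change these numbers. Activations, the CUDA context, and allocator fragmentation are deliberately left out.

```python
# Back-of-envelope per-GPU memory estimate for full LLM training.
# ASSUMPTIONS (not from the article):
#   - mixed-precision AdamW, accounted as 16 bytes per parameter:
#       2 (bf16/fp16 weights) + 2 (bf16/fp16 gradients)
#       + 4 (fp32 master weights) + 4 + 4 (fp32 Adam first/second moments)
#   - plain data parallelism: each GPU stores a full copy of this state
#   - activations, CUDA context and fragmentation are NOT counted

GIB = 1024 ** 3
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4  # = 16
RTX_4090_VRAM_GIB = 24  # approximate usable VRAM of one RTX 4090


def model_state_gib(n_params: int, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory for weights, gradients and optimizer state, in GiB."""
    return n_params * bytes_per_param / GIB


def activation_headroom_gib(n_params: int, vram_gib: float = RTX_4090_VRAM_GIB) -> float:
    """VRAM left on each GPU for activations once the model state is resident."""
    return vram_gib - model_state_gib(n_params)


if __name__ == "__main__":
    for n in (500_000_000, 1_000_000_000):
        print(
            f"{n / 1e9:.1f}B params: model state {model_state_gib(n):.1f} GiB, "
            f"headroom {activation_headroom_gib(n):.1f} GiB per GPU"
        )
```

Under these assumptions a 500M-parameter model needs roughly 7.5 GiB of model state per GPU, leaving about two thirds of each card for activations and larger batches, while a 1B model needs roughly 15 GiB and leaves far less headroom. That is consistent with, though not proof of, the article's observation that the rig works best around 500M parameters.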