Outdated Info Lurks in LLMs: How Token Probabilities Create Logical Inconsistencies

2025-01-12

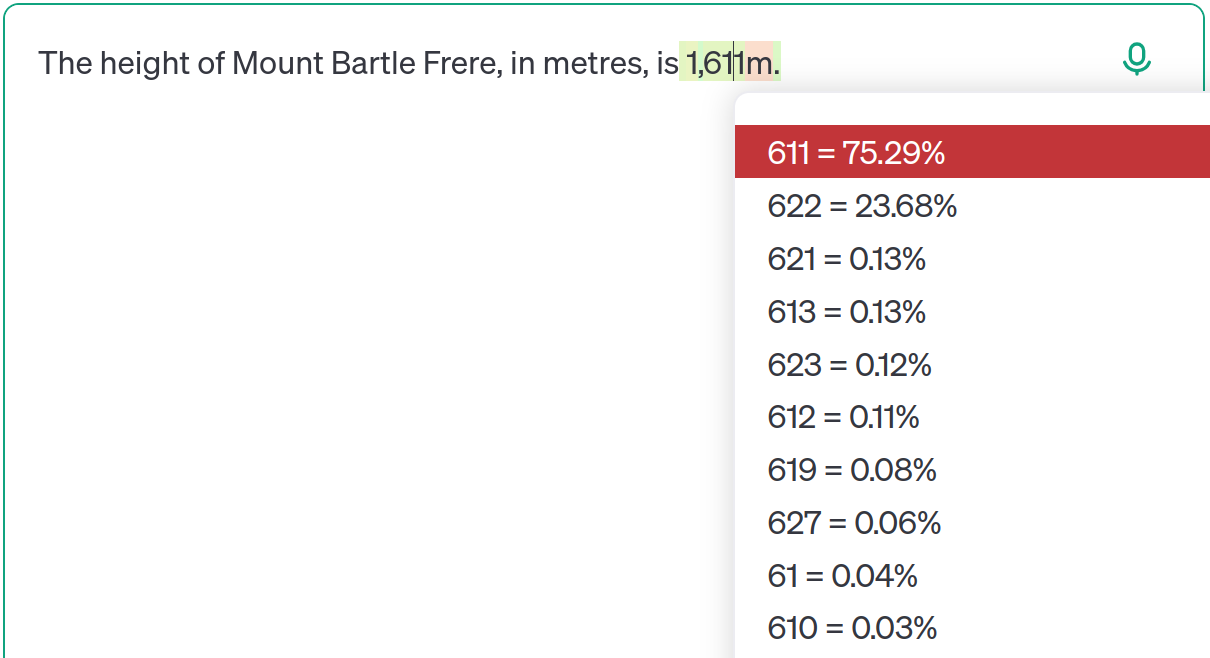

Large Language Models (LLMs) like ChatGPT are trained on massive internet datasets that often contain conflicting or outdated information. This article uses the height of Mount Bartle Frere as a case study to show that LLMs do not necessarily prioritize the most recent data. Instead, they predict each token from probability distributions learned during training. Even advanced models like GPT-4o can output the outdated figure, depending on subtle variations in the prompt. This is not simple 'hallucination': the model has learned several competing values for the same fact and shifts probability among them based on context. The author stresses the importance of understanding these limitations, avoiding over-reliance on LLM output, and being transparent about how the models behave.
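The mechanism can be illustrated with a toy sketch. It is not the author's experiment and does not query any real model: the prompts, the token labels `newer_value` and `older_value`, and the logit scores are all hypothetical, chosen only to show how a small context-dependent shift in scores changes which answer is most probable after a softmax.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution over tokens."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical logits: the same two candidate answers, scored differently
# depending on how the question is phrased. These numbers are invented.
LOGITS_BY_PROMPT = {
    "prompt_a": {"newer_value": 2.0, "older_value": 1.0},
    "prompt_b": {"newer_value": 1.0, "older_value": 1.6},
}

def most_likely(prompt):
    """Return the highest-probability token and the full distribution."""
    probs = softmax(LOGITS_BY_PROMPT[prompt])
    return max(probs, key=probs.get), probs

if __name__ == "__main__":
    for prompt in LOGITS_BY_PROMPT:
        token, probs = most_likely(prompt)
        print(prompt, token, {t: round(p, 3) for t, p in probs.items()})
```

In this sketch both answers keep nonzero probability under either prompt, so with sampling (rather than always taking the top token) the less likely value can still appear occasionally.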