Algorithms Can't Understand Life: On the Non-Computational Nature of Relevance Realization

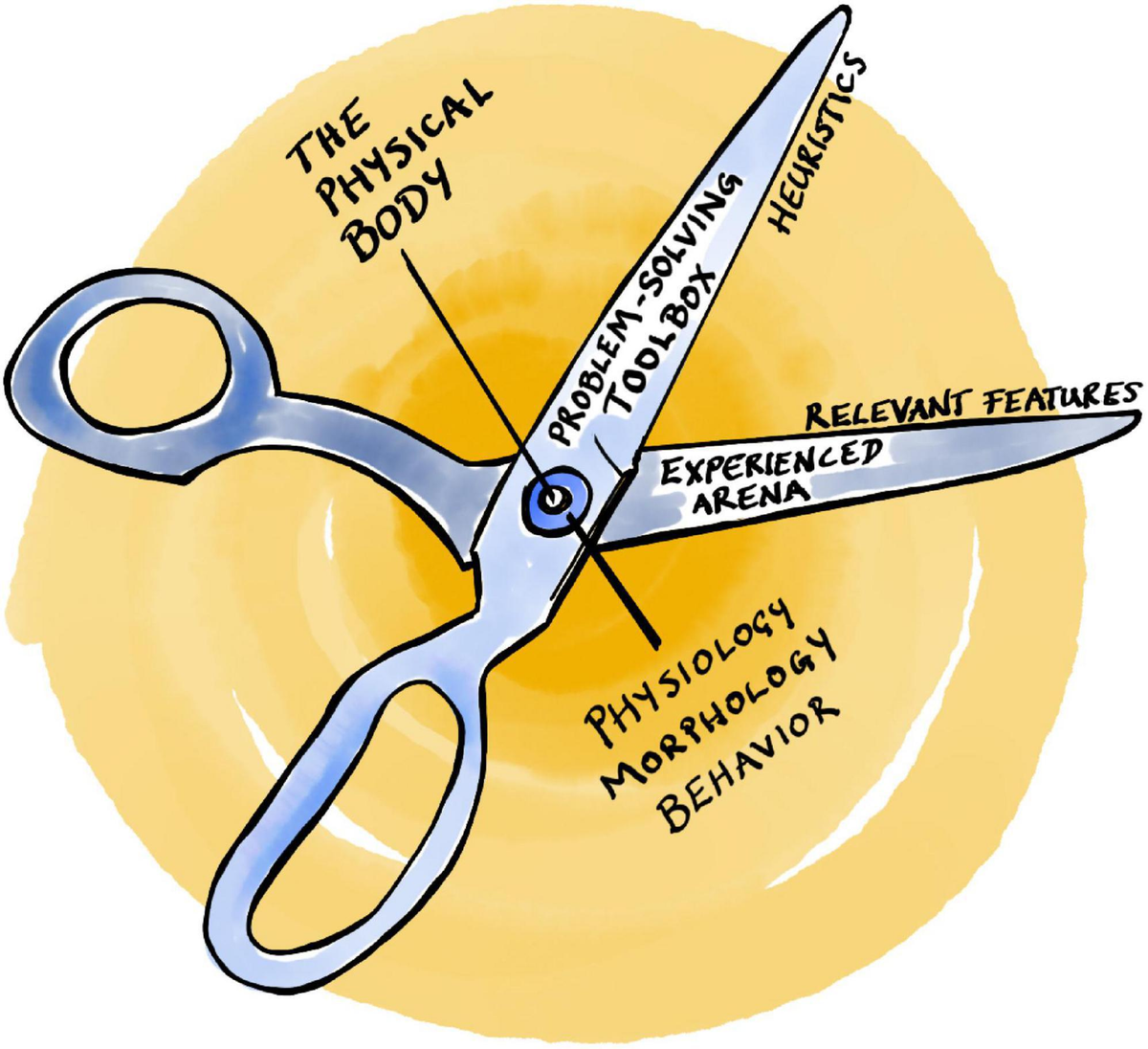

This article explores the fundamental difference between how organisms and algorithms come to know the world. Organisms inhabit a 'large world' overflowing with potential meaning, so they must engage in 'relevance realization' to pick out the environmental cues that matter. Algorithms, by contrast, operate within predefined 'small worlds' and cannot solve the problem of relevance on their own. The authors argue that relevance realization is not an algorithmic process but arises from the self-manufacturing dynamic organization of living matter. This organization enables organisms to act autonomously and to anticipate the consequences of their actions. That capacity is key to distinguishing living systems from non-living ones, such as algorithms and machines, and offers a novel perspective on natural agency, cognition, and consciousness.