Annotated KAN: A Deep Dive into Kolmogorov-Arnold Networks

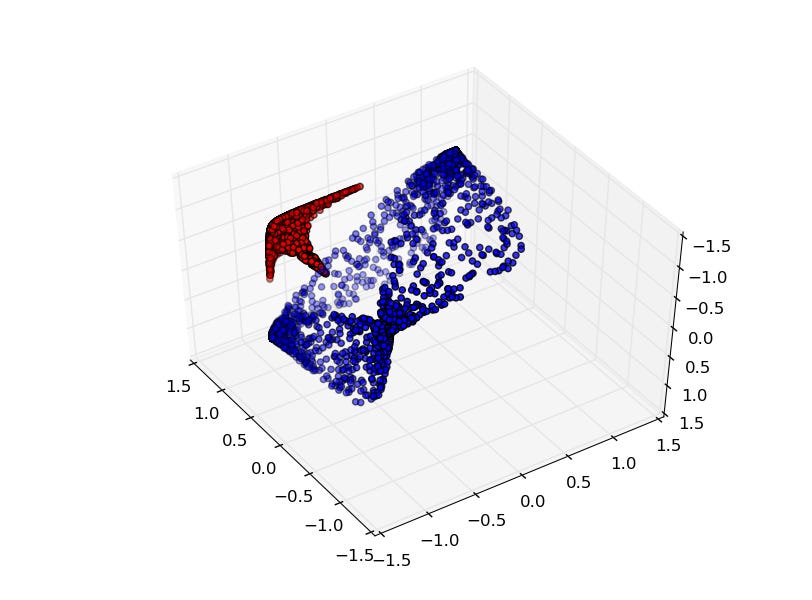

This post explains the architecture and training process of Kolmogorov-Arnold Networks (KANs), an alternative to Multi-Layer Perceptrons (MLPs). An MLP layer multiplies each input x_j by a scalar weight w_ij and sums the products; a KAN layer re-wires each of those multiplications into the application of a learnable univariate function φ_ij to x_j, so the activation functions themselves become the parameters. The article walks through a minimal KAN architecture, B-spline optimizations, and regularization techniques, with code examples and visualizations of the results. It also explores applications of KANs, such as training on the MNIST dataset, and future research directions, such as improving KAN efficiency.
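To make the "multiplication becomes function application" idea concrete, here is a minimal NumPy sketch. It rests on our own simplifying assumptions and is not the post's implementation: each φ_ij is a weighted sum of degree-1 B-spline (hat) basis functions on a fixed uniform grid, whereas KAN implementations typically use higher-order (e.g. cubic) B-splines and additional terms. The names `mlp_layer`, `hat_basis`, and `kan_layer` are hypothetical.

```python
import numpy as np


def mlp_layer(x, W):
    """MLP layer before its fixed activation: y_i = sum_j W[i, j] * x[j]."""
    return W @ x


def hat_basis(x, grid):
    """Degree-1 B-spline (hat) basis on a uniform grid (an assumption of this sketch).

    Returns shape (len(x), len(grid)); column k is the hat function centred
    at grid[k], evaluated at every entry of x.
    """
    h = grid[1] - grid[0]  # uniform grid spacing
    return np.maximum(1.0 - np.abs(x[:, None] - grid[None, :]) / h, 0.0)


def kan_layer(x, coef, grid):
    """KAN layer: y_i = sum_j phi_ij(x[j]).

    coef has shape (out_dim, in_dim, len(grid)), and each learnable
    univariate function is phi_ij(t) = sum_k coef[i, j, k] * B_k(t).
    """
    B = hat_basis(x, grid)                     # (in_dim, n_basis)
    phi = np.einsum("ijk,jk->ij", coef, B)     # phi[i, j] = phi_ij(x[j])
    return phi.sum(axis=1)                     # sum over inputs j


# A KAN layer can reproduce an MLP layer: choosing coef[i, j, k] = W[i, j] * grid[k]
# makes every phi_ij the linear function t -> W[i, j] * t on the grid's range,
# because hat functions reproduce linear functions exactly.
rng = np.random.default_rng(0)
W = rng.normal(size=(3, 4))
x = rng.uniform(-1.0, 1.0, size=4)
grid = np.linspace(-1.0, 1.0, 9)
coef = W[:, :, None] * grid[None, None, :]
print(np.allclose(mlp_layer(x, W), kan_layer(x, coef, grid)))  # True
```

The check at the end shows that an MLP layer is a special case in which every φ_ij is a straight line; any other choice of coefficients yields nonlinear (here piecewise-linear) functions, which is where a KAN gets its extra expressiveness.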