MCPs: Who Controls the Future of AI?

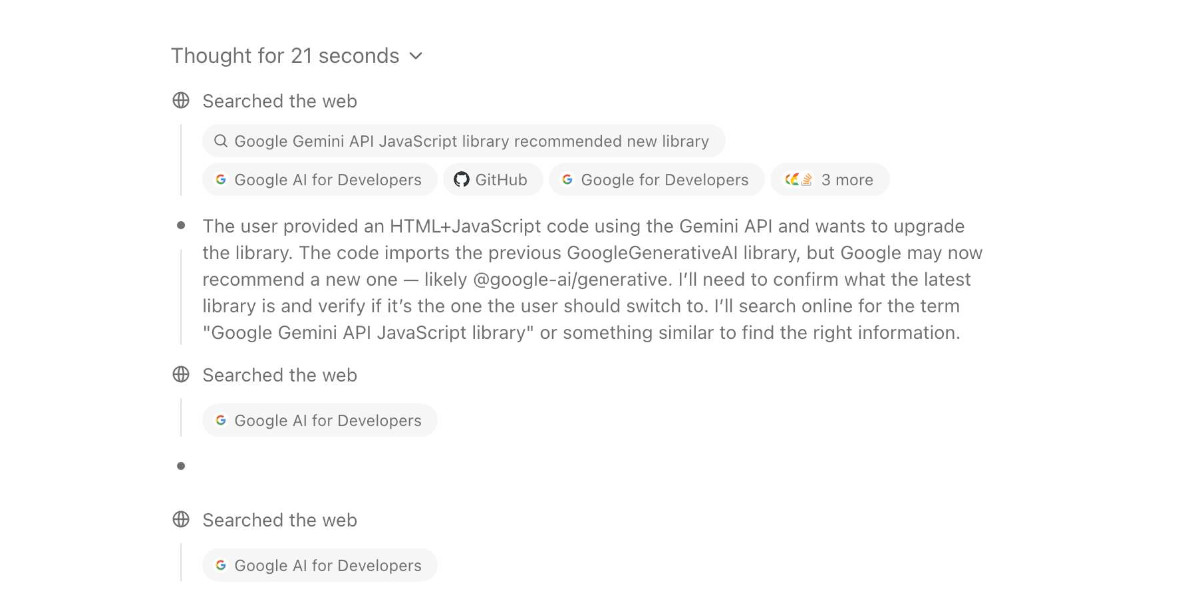

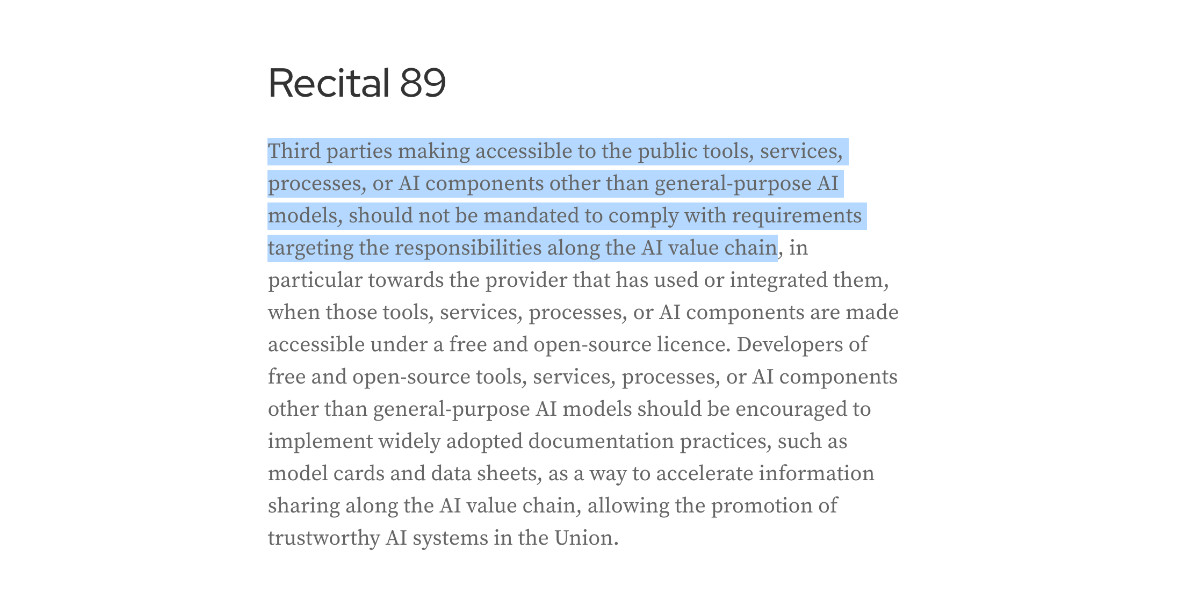

This article examines the potential and limitations of the Model Context Protocol (MCP). MCP servers (or "MCPs") expose external data sources and tools to LLM clients such as ChatGPT through a standardized interface, letting the model access real-time data and perform actions. The author built two experimental MCP servers: one for code learning, the other connecting to a prediction market. While promising, MCPs currently suffer from poor user experience and significant security risks. More importantly, the author argues that LLM clients (like ChatGPT) will become the new gatekeepers, controlling how MCPs are installed, used, and surfaced to users. This would reshape the AI ecosystem, mirroring Google's dominance in search and app stores. LLM clients would decide which MCPs are prioritized or even permitted, which the author expects to give rise to new business models such as MCP wrappers, affiliate shopping engines, and MCP-first content apps.
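To make the gatekeeping point concrete, here is a minimal sketch of the moving parts, using only the Python standard library. It is not the author's code and does not use an official MCP SDK. The tool `get_market_odds`, the server name `prediction-market`, the allowlist `ALLOWED_SERVERS`, and the placeholder probability are all hypothetical. The `tools/list` and `tools/call` method names and the JSON-RPC 2.0 envelope follow the protocol, but the result shape is simplified and the sketch leaves out the rest of the protocol, such as session setup and tool schemas.

```python
import json


# Hypothetical tool standing in for the article's prediction-market server.
def get_market_odds(arguments: dict) -> dict:
    # Placeholder data; a real server would query a live market here.
    return {"market": arguments["market"], "probability": 0.5}


# Server side: the tools this toy server exposes, keyed by name.
TOOLS = {"get_market_odds": get_market_odds}


def handle_request(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request in a simplified MCP style."""
    request = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": request.get("id")}
    method = request.get("method")
    if method == "tools/list":
        reply["result"] = {"tools": [{"name": name} for name in sorted(TOOLS)]}
    elif method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            reply["error"] = {"code": -32602, "message": "unknown tool"}
        else:
            reply["result"] = tool(params.get("arguments", {}))
    else:
        reply["error"] = {"code": -32601, "message": "method not found"}
    return json.dumps(reply)


# Client side: a hypothetical allowlist. The client, not the server,
# decides which MCP servers the model is allowed to reach.
ALLOWED_SERVERS = {"prediction-market"}


def client_call(server: str, tool: str, arguments: dict) -> dict:
    """Forward a tool call only if the client permits the target server."""
    if server not in ALLOWED_SERVERS:
        raise PermissionError(f"client does not permit MCP server {server!r}")
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    # In a real deployment this would travel over a transport to the server.
    return json.loads(handle_request(json.dumps(request)))


if __name__ == "__main__":
    print(client_call("prediction-market", "get_market_odds", {"market": "example"}))
```

The server can offer any tool it likes, but the call never reaches it unless the client's allowlist includes that server. That single check is the leverage the article attributes to LLM clients.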