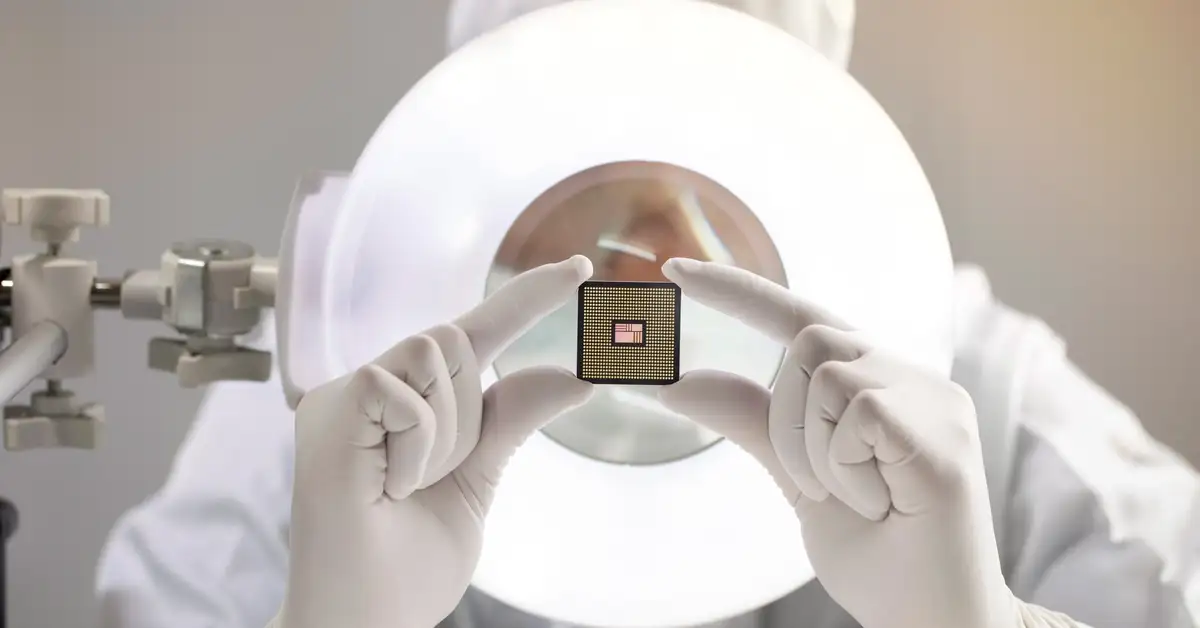

Google Unveils Ironwood: A 7th-Gen TPU for the Inference Age

At Google Cloud Next '25, Google announced Ironwood, its seventh-generation Tensor Processing Unit (TPU). Google describes it as its most powerful and scalable custom AI accelerator yet, and it is designed specifically for inference. Ironwood reflects what Google calls a shift toward an “age of inference,” in which AI models proactively generate insights and answers rather than just data. Ironwood scales up to 9,216 liquid-cooled chips in a single pod, linked by Google's Inter-Chip Interconnect (ICI) networking, with a full pod drawing nearly 10 MW. It is a key component of Google Cloud's AI Hypercomputer architecture, and developers can use Google's Pathways software stack to harness the combined compute of tens of thousands of Ironwood TPUs.
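To put the pod-scale figures in perspective, the short sketch below divides the quoted power by the chip count. It assumes that "nearly 10 MW" is the total for one full 9,216-chip pod. The announcement does not say what that figure includes, so the result is only a rough upper bound on average power per chip, not a published per-chip specification.

```python
# Rough average power per chip for a full Ironwood pod.
# Assumption: "nearly 10 MW" is the total for one 9,216-chip pod.
# What that total covers is not specified, so this is only an upper bound.
POD_CHIPS = 9_216
POD_POWER_WATTS = 10e6  # upper bound: the announcement says "nearly" 10 MW


def per_chip_watts(total_watts: float, chips: int) -> float:
    """Average power per chip if total_watts is spread evenly across chips."""
    return total_watts / chips


budget = per_chip_watts(POD_POWER_WATTS, POD_CHIPS)
print(f"~{budget / 1000:.2f} kW per chip (upper bound)")
```

Because the pod figure is "nearly" 10 MW, the true average falls somewhat below the roughly 1.09 kW this prints.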