A 300 IQ AI: Omnipotent or Still Bound by Reality?

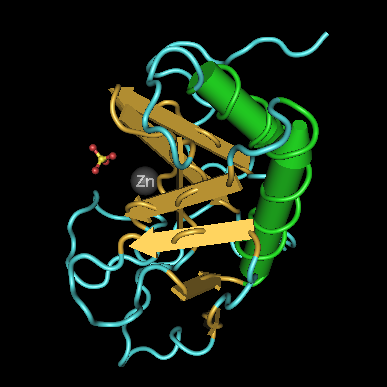

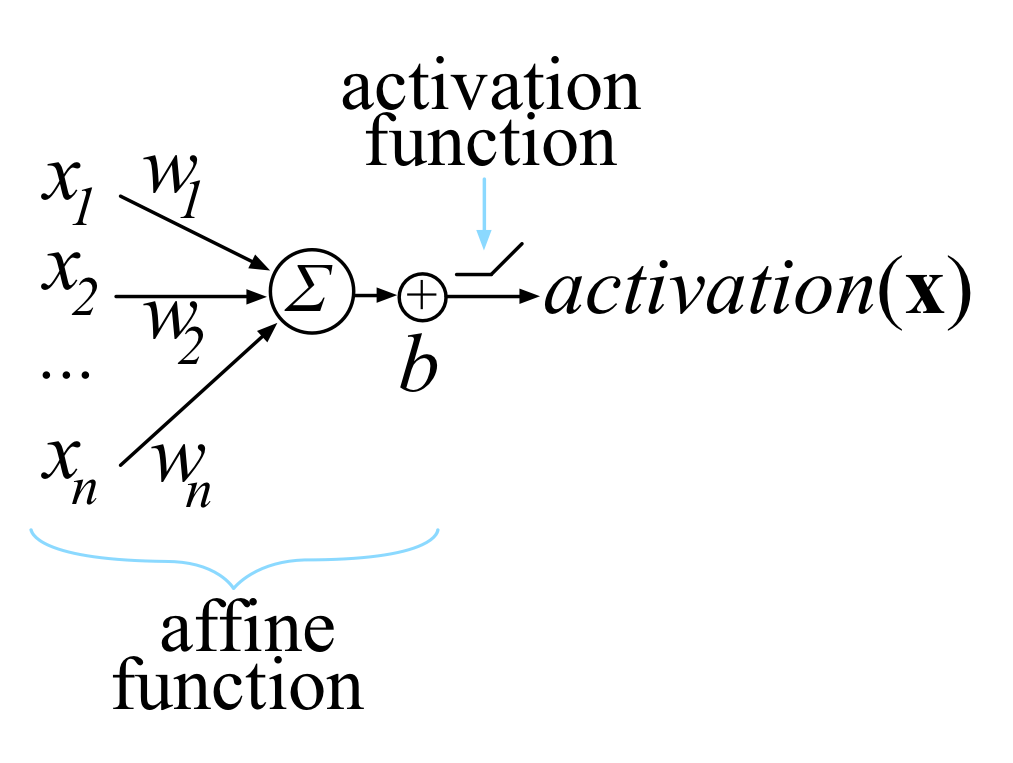

This article explores the limits of a superintelligent AI with an IQ of 300 that thinks 10,000 times faster than a typical human. Such an AI could rapidly solve problems in math, programming, and philosophy, but the author argues that its capabilities might be less impressive than expected in areas like weather forecasting, predicting geopolitical events (e.g., Trump's win), and defeating top chess engines. These fields require not only intelligence but also vast computational resources, data, and physical experiments. Biology in particular relies heavily on accumulated experimental knowledge and tools, so the AI might not immediately cure cancer. The article concludes that the initial impact of a super-AI might show up primarily as accelerated economic growth rather than as an immediate solution to every problem, because its development remains constrained by physical limitations and feedback loops.