Managing Multi-Account AWS Architectures with Terraform Workspaces

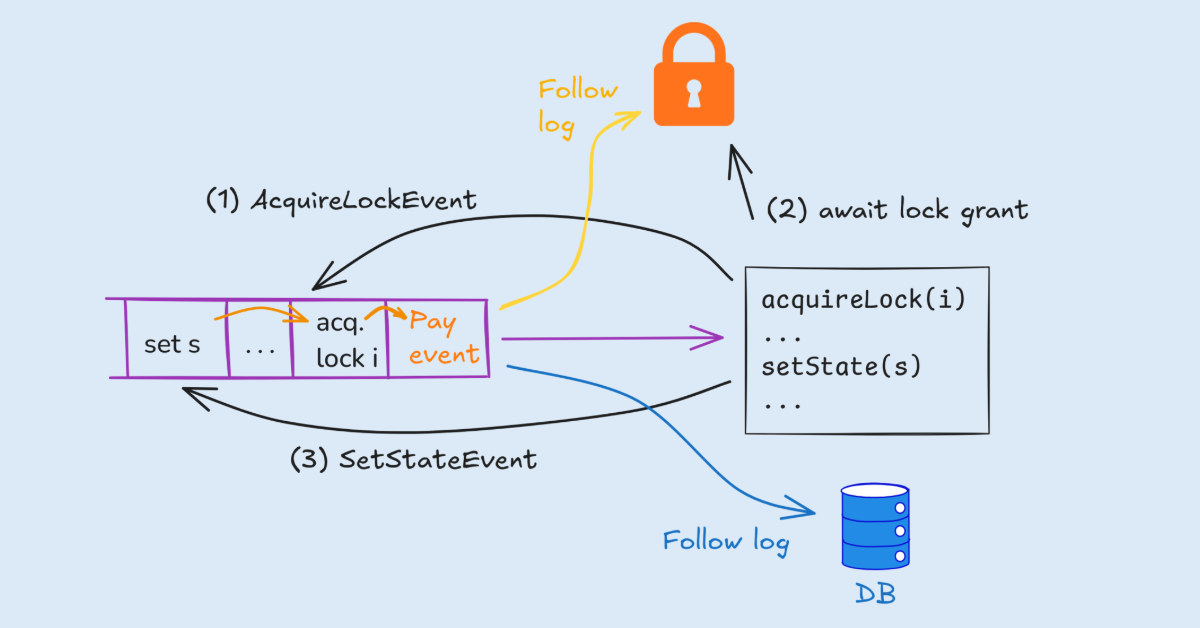

This article shows how to manage a multi-account AWS architecture with Terraform workspaces. It focuses on associating each account with a workspace and deliberately leaves out modularity, security, and remote state storage. Everything is tested locally against Localstack, using OpenTofu as an open-source alternative to Terraform. Several workspaces are created, and each one dynamically loads its own variable file, so that environments such as development and UAT get separate configurations.
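As a rough illustration of the workspace-to-environment idea, the following sketch selects a settings file based on the active workspace name. It is not necessarily the approach the full article takes: the `envs/` directory, the JSON file layout, the `region` and `bucket_prefix` keys, and the bucket resource are all hypothetical, and the provider block assumes Localstack is listening on its default edge port, 4566.

```hcl
# Hypothetical layout: envs/dev.json and envs/uat.json sit next to this file,
# each containing e.g. {"region": "eu-west-1", "bucket_prefix": "demo"}.
locals {
  # terraform.workspace holds the name of the currently selected workspace.
  env = terraform.workspace

  # Load the settings file that matches the workspace (path and keys are assumptions).
  settings = jsondecode(file("${path.module}/envs/${local.env}.json"))
}

provider "aws" {
  region = local.settings.region

  # Dummy credentials and skipped checks, suitable only for local testing.
  access_key                  = "test"
  secret_key                  = "test"
  skip_credentials_validation = true
  skip_metadata_api_check     = true
  skip_requesting_account_id  = true
  s3_use_path_style           = true

  # Point the services used here at the assumed Localstack endpoint.
  endpoints {
    s3  = "http://localhost:4566"
    sts = "http://localhost:4566"
  }
}

# Illustrative resource whose name varies per workspace.
resource "aws_s3_bucket" "example" {
  bucket = "${local.settings.bucket_prefix}-${local.env}"
}
```

With this in place, `tofu workspace new dev` followed by `tofu plan` would read `envs/dev.json`, while `tofu workspace select uat` switches to `envs/uat.json`. An alternative that keeps standard variable files is to pass one explicitly, for example `tofu plan -var-file="uat.tfvars"`.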

