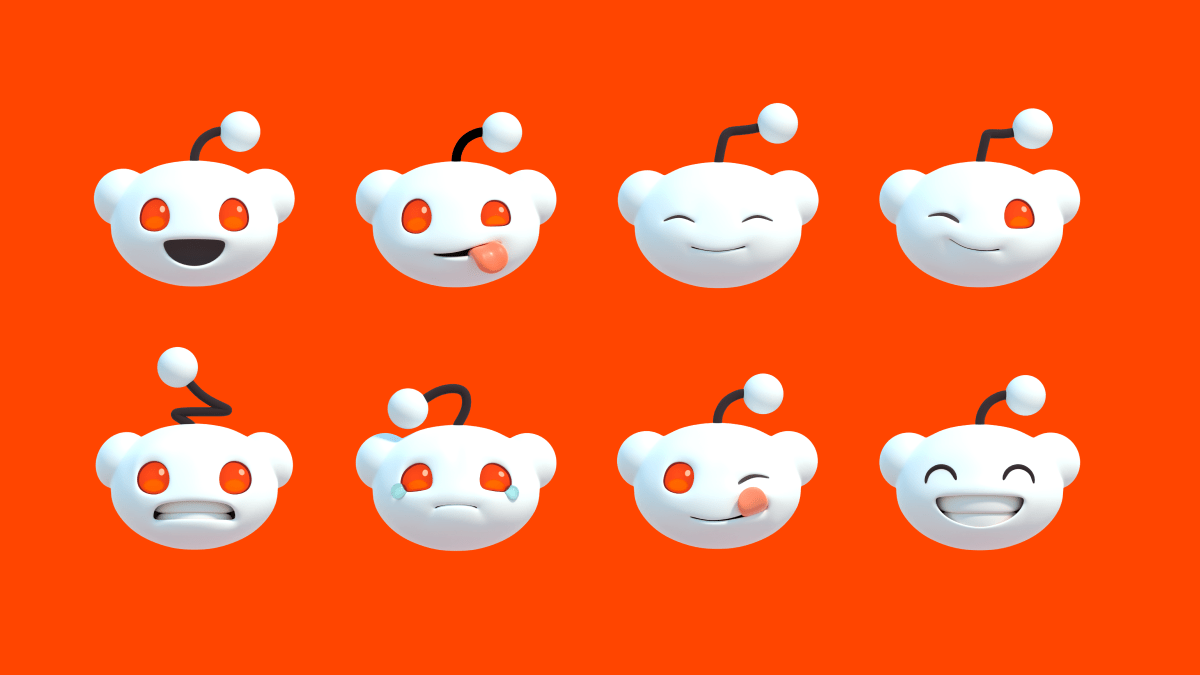

OpenAI Uses Reddit's r/ChangeMyView to Benchmark AI Persuasion

OpenAI used Reddit's r/ChangeMyView subreddit to evaluate the persuasive abilities of its new reasoning model, o3-mini. The subreddit, where users post opinions and invite others to argue against them, provided a dataset for assessing how well the AI's generated responses could change minds. While o3-mini didn't significantly outperform previous models like o1 or GPT-4o, all three demonstrated strong persuasive abilities, ranking in roughly the 80th to 90th percentile of human performance. OpenAI emphasizes that the goal isn't to create hyper-persuasive AI, but rather to mitigate the risks associated with excessively persuasive models. The benchmark also highlights the ongoing challenge of securing high-quality datasets for AI model development.