OpenAI Accused of Training GPT-4o on Unauthorized Paywalled Books

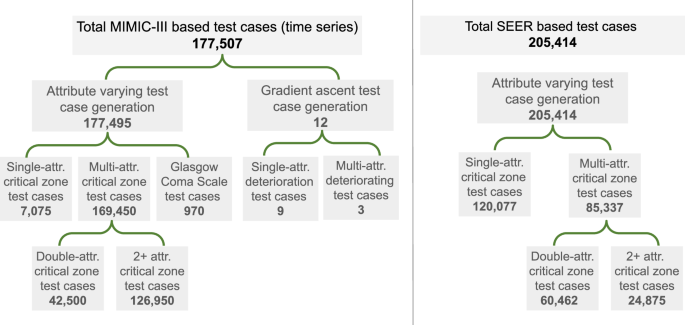

A new paper from the AI Disclosures Project accuses OpenAI of training its GPT-4o model on unlicensed, paywalled books, primarily from O'Reilly Media. Using the DE-COP method, the authors report that GPT-4o recognizes O'Reilly's paywalled content significantly more strongly than GPT-3.5 Turbo does, which they take as evidence that a substantial amount of this content was used in training without authorization. OpenAI holds some data licenses and offers opt-out mechanisms, but the paper adds to the scrutiny the company already faces from legal challenges over its copyright practices. The authors acknowledge limitations in their methodology; even so, the findings raise serious concerns about how OpenAI acquires its training data.