Sesame's CSM: Near-Human Speech, But Still in the Valley

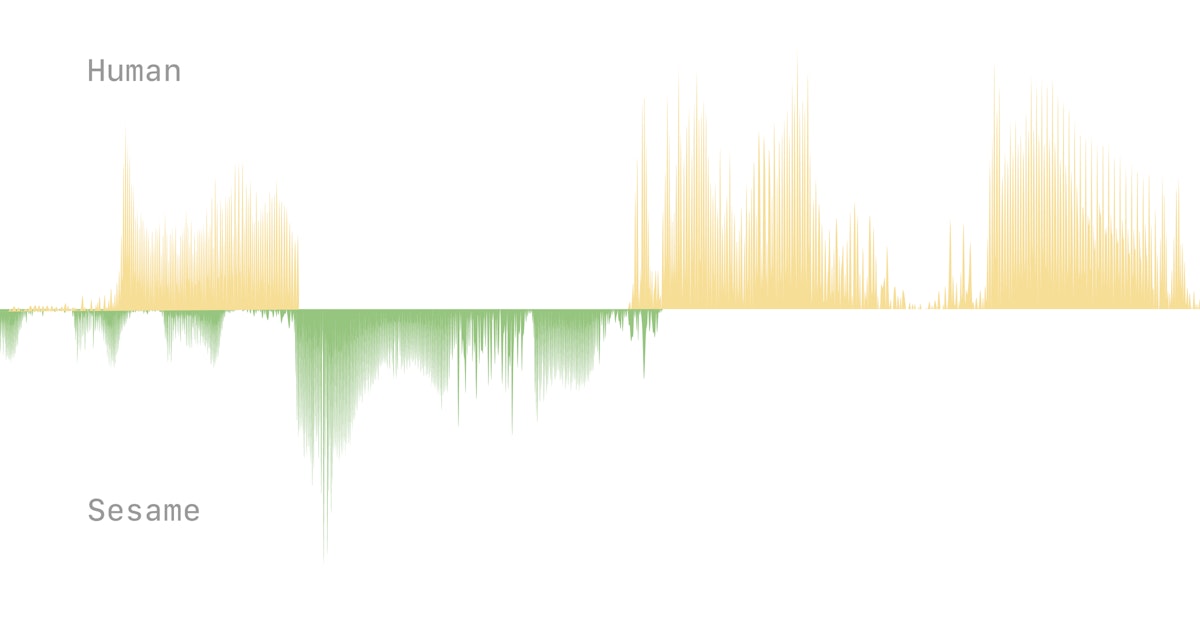

A video showcasing CSM (Conversational Speech Model), the new speech model from Sesame, has gone viral. Built on Meta's Llama architecture, the model generates conversations realistic enough to blur the line between human and AI speech. Unlike traditional two-stage methods, it uses a single-stage, multimodal transformer that processes text and audio jointly.

In blind tests, listeners rated isolated speech samples as near-human in quality, but when the same samples were heard in conversational context, they still preferred real human voices. Sesame co-founder Brendan Iribe acknowledges ongoing challenges with tone, pacing, and interruptions. He admits the model is still under development but remains optimistic about its future.
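To make "processes text and audio jointly" more concrete, here is a toy Python sketch. It is not Sesame's implementation. It flattens a conversation into one sequence in which text tokens and audio tokens sit side by side, so a single transformer could attend to both. The `Turn` class, the `interleave` function, the speaker marker, and all token IDs are hypothetical. Real systems use a learned text tokenizer and a neural audio codec, and CSM's actual token layout may differ.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical token representation: (modality, token_id).
# All IDs below are made up for illustration only.
Token = Tuple[str, int]


@dataclass
class Turn:
    speaker: int             # hypothetical speaker index
    text_tokens: List[int]   # hypothetical text-tokenizer IDs
    audio_tokens: List[int]  # hypothetical audio-codec IDs


def interleave(turns: List[Turn]) -> List[Token]:
    """Flatten a conversation into one mixed text/audio token sequence.

    A single model reading this sequence sees the text *and* audio of every
    earlier turn as context, instead of handing text off to a separate
    audio-generation stage.
    """
    seq: List[Token] = []
    for turn in turns:
        seq.append(("speaker", turn.speaker))               # turn boundary
        seq.extend(("text", t) for t in turn.text_tokens)   # what was said
        seq.extend(("audio", a) for a in turn.audio_tokens) # how it sounded
    return seq


# Usage: two short turns from different speakers.
convo = [
    Turn(speaker=0, text_tokens=[11, 12], audio_tokens=[901, 902]),
    Turn(speaker=1, text_tokens=[13], audio_tokens=[903]),
]
print([modality for modality, _ in interleave(convo)])
# prints ['speaker', 'text', 'text', 'audio', 'audio', 'speaker', 'text', 'audio']
```

The design point the sketch illustrates is that one model sees the whole mixed history in a single sequence; a two-stage approach instead splits generation across separate models and passes an intermediate representation between them.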